Artificial intelligence (AI) refers to a group of tools that let machines perform complex tasks, such as recognizing visuals, interpreting and converting speech and text, examining information, offering suggestions, and carrying out other high-level operations. AI supports breakthroughs in tech, science, healthcare, and other fields, benefiting people and institutions.

Core Highlights

- AI works by analyzing large amounts of data with machine learning algorithms and neural networks to recognize patterns, make predictions, and improve performance over time.

- AI offers continuous, precise, and efficient automation that reduces human error, eliminates repetitive tasks, and speeds up research.

- AI presents challenges like data vulnerabilities, model manipulation, operational failures, and ethical and legal risks that require strict oversight and security.

Definition of Artificial Intelligence (AI)

Artificial intelligence involves designing and building computer programs that handle tasks previously performed only by humans, like understanding speech, making choices, and spotting trends. The term “AI” covers many fields and tools, including machine learning models, deep learning algorithms, and natural language processing (NLP).

People often use the term to refer to various modern technologies, but many question whether these computer systems truly qualify as artificial intelligence. Some believe that most tools in use today are sophisticated forms of machine learning that serve only as a stepping stone toward genuine artificial intelligence, often called artificial general intelligence (AGI), and eventually toward artificial superintelligence (ASI).

While debates continue over whether genuinely intelligent machines exist, most people today use the term “AI” to describe a collection of machine learning-based tools, like ChatGPT and computer vision, which allow systems to handle tasks like writing text, driving vehicles, and examining information.

How Does Artificial Intelligence Work?

Although methods differ among AI approaches, data remains the foundation. AI tools grow and adapt by processing massive datasets, uncovering trends and connections that people might overlook.

This growth often depends on algorithms, which are defined sets of steps and instructions that steer how AI systems analyze data and make choices. In machine learning, these algorithms train on labeled and unlabeled data to forecast outcomes and sort information.

Deep learning, a subfield of machine learning, employs artificial neural networks with multiple layers that are loosely modeled on the structure of the human brain. As these systems absorb more data and refine their models, they gain greater skill in handling specific tasks, such as identifying images and translating languages.
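To make the idea of layers concrete, here is a minimal sketch of a two-layer network that computes XOR, a function a single neuron cannot represent. The weights and the function names (`neuron`, `xor_network`) are hand-picked for illustration; a real deep learning system would learn its weights from data rather than have them written in by hand.

```python
def step(x):
    """Threshold activation: output 1 when the weighted input is positive."""
    return 1 if x > 0 else 0


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, then threshold."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)


def xor_network(a, b):
    """Two-layer network with hand-picked (not learned) weights for XOR."""
    h_or = neuron([a, b], [1, 1], -0.5)    # hidden unit acting as "a OR b"
    h_and = neuron([a, b], [1, 1], -1.5)   # hidden unit acting as "a AND b"
    # Output unit fires when OR is true but AND is not.
    return neuron([h_or, h_and], [1, -1], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden layer turns the raw inputs into intermediate features, and the output layer combines them. Stacking more layers follows the same pattern.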

The 3 Main Types of Artificial Intelligence

Although there are many different kinds of AI, three main types stand out as the most commonly discussed versions: artificial narrow intelligence, artificial general intelligence, and generative AI. Let’s examine each type of artificial intelligence in turn.

Artificial Narrow Intelligence

Narrow AI executes focused tasks like speech recognition and visual data processing. It represents the most ubiquitous form of AI. Common examples include smartphone voice assistants like Siri, Alexa, and Google Assistant, content suggestion systems used by Netflix, Disney+, and Amazon Prime, and the intelligent software that operates autonomous cars.

Narrow AI completes functions that used to require human intelligence, but only works within defined boundaries and focuses on a singular purpose. It lacks awareness and true comprehension, relying instead on coded instructions and learned patterns from data. For instance, image-recognition AI detects items in pictures because developers trained it on labeled image datasets, not because it grasps the concept of an object.

The term “narrow AI” distinguishes this kind of artificial intelligence from Artificial General Intelligence (AGI), also called “strong AI.” AGI describes a system capable of grasping concepts, learning from experience, and applying knowledge to disparate tasks, similar to human thinking. In comparison, narrow AI focuses on a single function and cannot operate beyond the purpose it was programmed and trained for.

Within its domain, narrow AI can often perform specific tasks more efficiently and accurately than humans. It can work 24/7 without breaks, does not draw a salary, and processes large amounts of data quickly. It proves particularly useful for repetitive, time-consuming, and dangerous tasks.

Artificial General Intelligence (AGI)

Artificial general intelligence is a hypothetical idea where machines match or even surpass human intelligence. In essence, AGI represents the AI often portrayed in science fiction literature and film.

Experts still disagree on how to define or identify artificial general intelligence. One of the most well-known methods for testing machine intelligence is the Turing Test, also called the Imitation Game. Alan Turing, a pioneering mathematician, computer scientist, and codebreaker, introduced this concept in a 1950 paper on machine intelligence. In the test, a human interrogator exchanges messages with both a person and a machine, without knowing which is which. If the interrogator struggles to tell them apart, Turing argued that examiners could consider the machine intelligent.

To make things murkier, scientists and philosophers still debate whether AGI is close at hand, decades away, or achievable at all. For instance, a 2023 paper from Microsoft Research claims that OpenAI’s GPT-4 may represent an early version of AGI. However, many experts doubt the thesis and suggest the claims served to generate attention rather than reflect real progress.

Generative Artificial Intelligence

Generative AI, often shortened to “Gen AI,” describes deep learning systems that, based on a user’s inputs and commands, produce original, complex content, such as detailed text, high-resolution images, lifelike video, and audio.

In a basic sense, generative models encode a simplified representation of their training data and draw on that internal structure to produce new outputs that resemble, but do not duplicate, the original material.

For a long time, generative models have enjoyed significant use in statistics for examining numerical data. However, in the past ten years, they have advanced to ingest, analyze, and produce more complex forms of data. This shift happened alongside the rise of three powerful deep learning model types:

- Diffusion models: First described in 2015, they work by gradually adding random noise to training images until the images become unrecognizable, then learning to reverse the process so they can generate new, original images from user prompts

- Transformer models: Trained on sequential data to produce long sequences of output, such as words in a sentence, shapes in an image, lines of code, and video frames, they power many of today’s leading generative AI tools, including ChatGPT, Claude, Gemini, and Midjourney

- Variational autoencoders (VAEs): Introduced in 2013, they allow models to create multiple variations of content based on a given input or command
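The noising half of a diffusion model can be sketched in a few lines. The example below assumes one common formulation, in which each step slightly shrinks the signal and mixes in Gaussian noise. The names `add_noise` and `signal_fraction`, the four-value "image," and the noise level `beta` are all illustrative choices, and the learned reverse (denoising) process is not shown because it requires a trained network.

```python
import math
import random


def add_noise(pixels, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * x + spread * rng.gauss(0.0, 1.0) for x in pixels]


def signal_fraction(beta, steps):
    """Share of the original signal that survives after `steps` noising steps."""
    return math.sqrt(1.0 - beta) ** steps


rng = random.Random(0)          # fixed seed so runs are repeatable
image = [1.0, 1.0, -1.0, -1.0]  # a tiny made-up "image" of four pixel values
noisy = image
for _ in range(200):
    noisy = add_noise(noisy, beta=0.05, rng=rng)

# After many steps almost none of the original signal remains.
print(signal_fraction(0.05, 200))
```

Because so little of the original survives, a model that learns to undo these steps can start from pure noise and work backward toward a new image.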

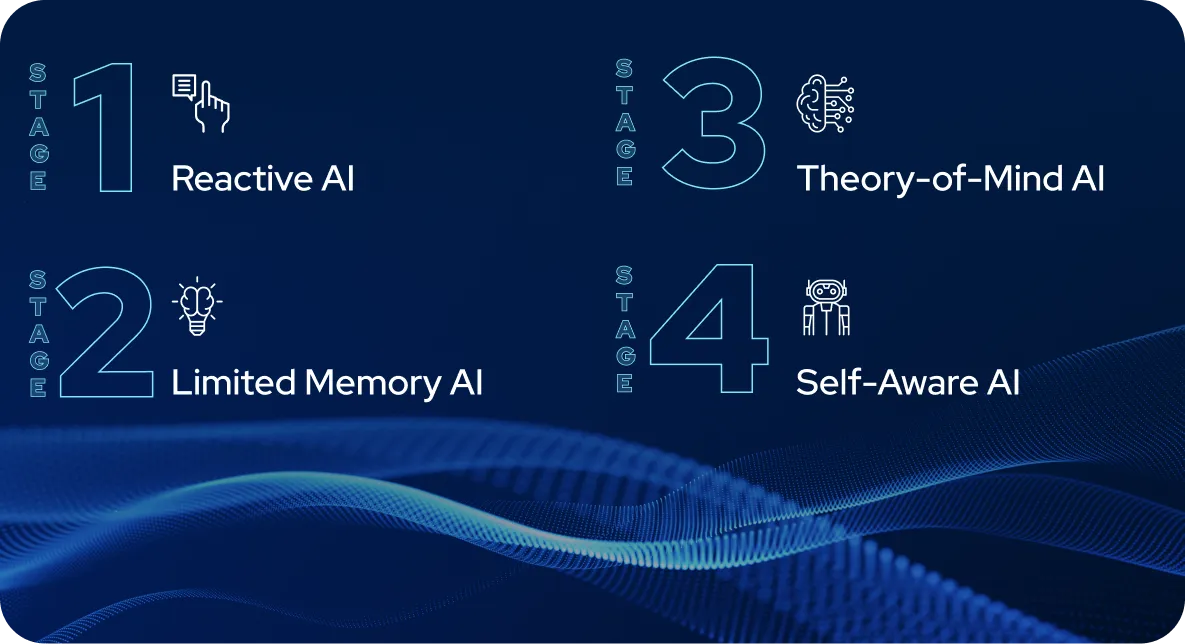

The 4 Stages of AI Development

As scientists develop more advanced types of artificial intelligence, they also need to form deeper, more precise definitions of intelligence and consciousness. To explain these ideas, experts have identified four categories of artificial intelligence.

Stage One: Reactive AI

Reactive machines represent the simplest form of artificial intelligence. Systems designed this way lack memory of past events and instead respond solely to what they encounter in real time. They complete specific complex activities within a tight range, like playing chess, but cannot operate beyond their restrictions.

Stage Two: Limited Memory AI

Machines with limited memory have a partial grasp of previous events. They engage with their surroundings more effectively than reactive systems. For instance, autonomous vehicles rely on limited memory to navigate turns, monitor nearby traffic, and change speed. Still, these machines do not build a full picture of the world, since they store only short-term data and apply it within a brief timeframe.

Stage Three: Theory-of-Mind AI

Theory-of-mind machines would mark an early step toward artificial general intelligence. In addition to building models of their environment, these systems would recognize and interpret the thoughts, emotions, and intentions of other agents. However, computer scientists have not yet achieved this level of intelligence.

Stage Four: Self-Aware AI

Self-aware machines represent the most advanced theoretical form of AI. These systems would understand their environment, other beings, and their own existence. The concept aligns with what most people imagine when discussing the arrival of AGI. As of now, it remains a distant goal.

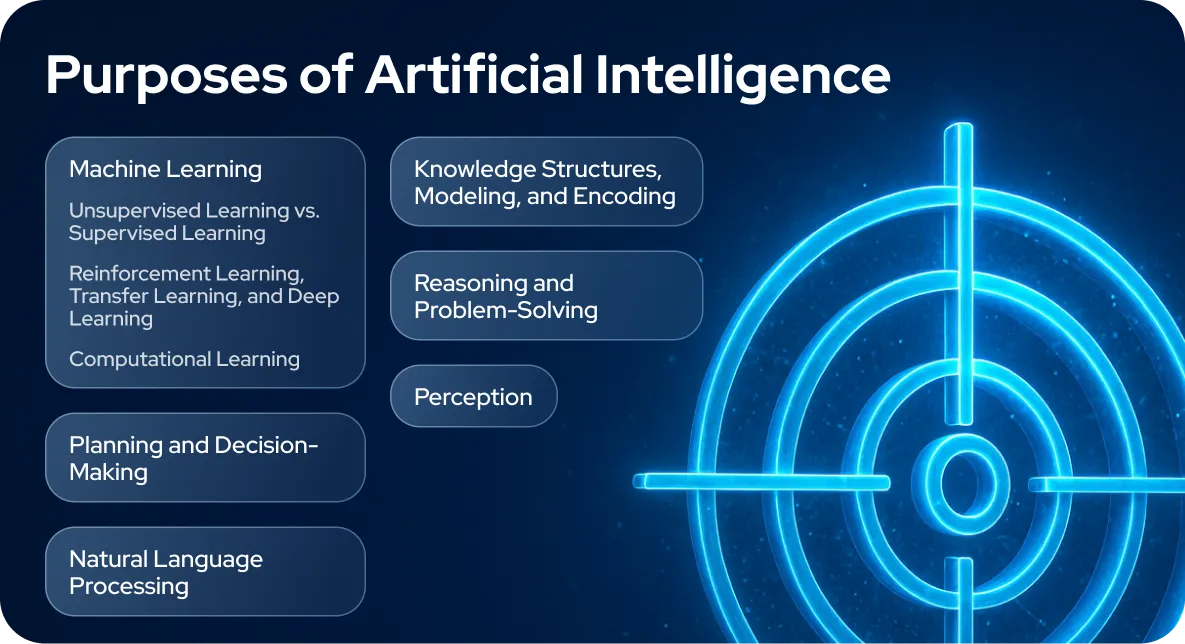

Purposes of Artificial Intelligence

Researchers have divided the broad challenge of creating artificial intelligence into several smaller subproblems. These challenges focus on specific abilities and behaviors that AI is expected to demonstrate. Let’s examine some of the desired traits that have drawn the most attention from computer scientists, outlining the main areas of AI research.

Knowledge Structures, Modeling, and Encoding

Knowledge representation and knowledge engineering permit AI systems to respond intelligently to questions and draw conclusions about real-world information. Structured knowledge formats support content-based search and retrieval, scene analysis, medical decision-making, data mining, and insights generation.

A knowledge base represents a collection of information structured so that software can easily process and use it. An ontology defines the objects, concepts, properties, and relationships relevant to a specific field of knowledge. Knowledge bases must capture elements such as objects and their attributes, categories, relationships between entities, events, conditions, timelines, cause-and-effect, meta-knowledge, and default assumptions, among others.
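As a rough illustration, a knowledge base can be sketched as a set of subject-relation-object facts, with category links that let an entity inherit properties from its parents. Every entity name, relation name, and helper function below is invented for this sketch and does not come from any particular knowledge-engineering tool.

```python
# Toy knowledge base: facts are (subject, relation, object) triples.
# All entity and relation names here are made up for illustration.
FACTS = {
    ("canary", "is_a", "bird"),
    ("bird", "is_a", "animal"),
    ("bird", "can", "fly"),
    ("animal", "has", "skin"),
}


def ancestors(entity, facts):
    """Return every category reachable from `entity` through is_a links."""
    found = set()
    frontier = [entity]
    while frontier:
        current = frontier.pop()
        for subj, rel, obj in facts:
            if subj == current and rel == "is_a" and obj not in found:
                found.add(obj)
                frontier.append(obj)
    return found


def holds(entity, relation, value, facts):
    """Check a fact directly or by inheriting it from a parent category."""
    candidates = {entity} | ancestors(entity, facts)
    return any((c, relation, value) in facts for c in candidates)


print(holds("canary", "can", "fly", FACTS))    # True, inherited from "bird"
print(holds("canary", "has", "skin", FACTS))   # True, inherited from "animal"
print(holds("canary", "can", "swim", FACTS))   # False, no supporting fact
```

Inheritance here behaves like a default assumption. A fuller system would also need a way to record exceptions, such as birds that cannot fly.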

Some of the toughest challenges in knowledge representation include the vast scope of commonsense knowledge and the way much of that basic knowledge exists in sub-symbolic form, meaning it is not available as clear, verbal, or written statements. Another major hurdle involves knowledge acquisition, which entails collecting and organizing information.

Machine Learning

Machine learning deals with developing programs that automatically improve their performance on specific tasks over time. It has remained a core element of artificial intelligence since the field first emerged. Machine learning includes several types: unsupervised learning, supervised learning, reinforcement learning, and computational learning.

Unsupervised Learning vs. Supervised Learning

Unsupervised learning examines raw data, identifies patterns, and makes predictions without labeled examples. Supervised learning, on the other hand, relies on training data that includes correct answers. It has two main forms: classification, where the system learns to assign inputs to categories, and regression, where it learns to predict numerical values based on numerical input.

In short, the labels in supervised learning show the correct answers and guide the model as it learns, while unsupervised learning works with unlabeled data and leaves the model to uncover patterns or structures on its own. Now that we understand the difference between supervised and unsupervised learning, let’s dig a little deeper into more complex forms of learning.
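The contrast can be shown with a small sketch. The supervised half uses a nearest-centroid classifier and the unsupervised half uses a one-dimensional, two-cluster version of k-means. Both are simple stand-ins chosen for brevity, and the measurements, labels, and function names are hypothetical.

```python
from statistics import mean

# Hypothetical one-dimensional measurements (for example, fruit weights in grams).
labeled = [(100, "small"), (110, "small"), (120, "small"),
           (300, "large"), (310, "large"), (320, "large")]


def fit_centroids(examples):
    """Supervised: average the inputs that share each known label."""
    by_label = {}
    for x, label in examples:
        by_label.setdefault(label, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}


def classify(x, centroids):
    """Predict the label whose centroid lies closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def two_means(values, steps=10):
    """Unsupervised: split unlabeled values into two clusters (1-D k-means)."""
    low, high = min(values), max(values)  # simple deterministic starting centers
    for _ in range(steps):
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(near_low), mean(near_high)
    return low, high


centroids = fit_centroids(labeled)
print(classify(130, centroids))             # small
print(two_means([x for x, _ in labeled]))   # two cluster centers, found without labels
```

The classifier needs the labels to learn what "small" and "large" mean. The clustering routine never sees them, yet it recovers the same two groups from the shape of the data alone.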

Reinforcement Learning, Transfer Learning, and Deep Learning

In reinforcement learning, an agent receives rewards for desirable actions and penalties for undesirable ones. Over time, it learns to select responses that lead to positive outcomes. Transfer learning involves applying knowledge gained from solving one task to a different but related task. Deep learning, a branch of machine learning, processes data using artificial neural networks inspired by the human brain and supports all these learning types.
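The reward-and-penalty loop can be sketched with the Q-learning update rule on a made-up five-cell corridor. To keep the output deterministic, this version sweeps over every state-action pair instead of letting the agent explore at random, which is a simplification. The reward values, learning rate, and discount factor are illustrative assumptions.

```python
# Corridor of 5 cells; the goal is cell 4. All values below are illustrative.
N_STATES, GOAL = 5, 4
ACTIONS = {"left": -1, "right": +1}
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor


def step(state, action):
    """Environment: move one cell, +1 reward at the goal, -0.1 penalty otherwise."""
    nxt = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else -0.1
    return nxt, reward


def train(sweeps=50):
    """Apply the Q-learning update to every state-action pair on each sweep."""
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(sweeps):
        for s in range(GOAL):  # the goal is terminal, so it needs no update
            for a in ACTIONS:
                nxt, reward = step(s, a)
                best_next = 0.0 if nxt == GOAL else max(q[(nxt, b)] for b in ACTIONS)
                q[(s, a)] += ALPHA * (reward + GAMMA * best_next - q[(s, a)])
    return q


q_table = train()
policy = {s: max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(GOAL)}
print(policy)  # every state should prefer "right"
```

The small penalty on each non-goal step is what pushes the agent toward the shortest route instead of wandering.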

Computational Learning

Computational learning theory evaluates learning systems based on factors like computational complexity, the amount of data needed, and various measures of optimization performance.

Natural Language Processing

Natural language processing permits software to read and generate human language. Key challenges in this field include speech recognition, retrieving data, text-to-speech generation, language translation, extracting relevant information, and answering questions.

Modern deep learning techniques in natural language processing include word embeddings, which represent words as vectors that capture their meanings, and transformers, a neural network design that uses attention mechanisms to process sequences. In 2019, generative pre-trained transformer (GPT) models started producing fluent, coherent text. By 2023, these models had achieved human-level performance on tests like the bar exam, SAT, ACT, GRE, LSAT, and other academic examinations.
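Both ideas can be illustrated with toy numbers. The sketch below compares made-up three-dimensional word vectors using cosine similarity, then computes attention weights using the scaled dot-product form (a softmax over query-key dot products). The vectors and function names are assumptions for illustration, since real models learn embeddings with hundreds or thousands of dimensions.

```python
import math

# Made-up 3-dimensional embeddings; real models learn far larger vectors.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d))."""
    scale = math.sqrt(len(query))
    scores = [dot(query, k) / scale for k in keys]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# "king" sits closer to "queen" than to "apple" in this toy space.
print(cosine(EMBEDDINGS["king"], EMBEDDINGS["queen"]) >
      cosine(EMBEDDINGS["king"], EMBEDDINGS["apple"]))   # True

words = list(EMBEDDINGS)
weights = attention_weights(EMBEDDINGS["king"], [EMBEDDINGS[w] for w in words])
print(dict(zip(words, weights)))  # weights sum to 1; "apple" gets the least
```

In a transformer, weights like these decide how much each word in a sequence contributes when the model builds its representation of another word.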

Perception

Machine perception refers to an AI system’s ability to interpret input from sensors like cameras, microphones, radar, sonar, lidar, wireless signals, and thermal and touch sensors to understand its surroundings. Computer vision focuses specifically on processing and analyzing visual data. Machine perception as a whole includes tasks such as speech recognition, image classification, facial and object recognition, object tracking, and sensory perception for robots.

Planning and Decision-Making

An AI agent is any technology that senses its environment and takes actions in response. A rational agent acts based on goals and preferences, exhibiting behaviors that help achieve its aims. In automated planning, the agent works toward a defined goal. In automated decision-making, the agent evaluates different scenarios based on preferences, seeking to reach favorable outcomes and avoid undesirable ones. To meet its preferences, the agent assigns a value to each situation, reflecting how desirable it is. By estimating the expected utility for each possible action, based on outcome probabilities and their assigned utility values, the agent selects the action with the best expected result.
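The expected-utility calculation described above can be sketched as follows. The two actions, their outcome probabilities, and their utility values are hypothetical numbers chosen only to show the arithmetic.

```python
# Hypothetical actions, each with (probability, utility) pairs for its outcomes.
ACTIONS = {
    "take_highway":   [(0.8, 10.0), (0.2, -20.0)],  # fast, but risk of a jam
    "take_side_road": [(1.0, 3.0)],                 # slower, but predictable
}


def expected_utility(outcomes):
    """Sum of probability-weighted utilities for one action."""
    return sum(p * u for p, u in outcomes)


def best_action(actions):
    """Pick the action whose expected utility is highest."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


for name, outcomes in ACTIONS.items():
    print(name, expected_utility(outcomes))
print(best_action(ACTIONS))  # take_highway
```

Here the highway scores 0.8 × 10 + 0.2 × (−20) = 4, which beats the side road’s certain 3, so the agent accepts some risk for a better average result.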

In classical planning, the agent has full knowledge of the outcome of every action. However, in most real-world situations, the agent faces uncertainty because it may not fully understand its current environment and cannot predict the exact result of its actions. Instead, it must make choices based on probability and then reevaluate the environment to determine whether the chosen action worked.

In certain problems, an agent’s preferences may remain unclear, particularly when other agents or humans are part of the environment. The agent can learn these preferences, for example through inverse reinforcement learning, or gather new information to refine them. Information value theory helps AI estimate the benefit of exploring and testing different options. Since the number of possible future actions and scenarios is often too large to fully compute, agents must make decisions and assess outcomes while dealing with uncertainty.

Reasoning and Problem-Solving

Early AI researchers created algorithms that mimicked the step-by-step reasoning humans use to solve problems and draw conclusions. By the late 1980s, new approaches emerged to handle uncertainty and incomplete data, incorporating ideas from probability theory and economic models.

Many of these algorithms struggle with large-scale reasoning tasks because they slow down drastically as the problem size increases. In addition, people seldom rely on the kind of step-by-step logic that early AI systems tried to replicate. Instead, humans often make quick, intuitive decisions. Achieving reasoning that is both accurate and efficient remains an open challenge in the development of artificial intelligence.

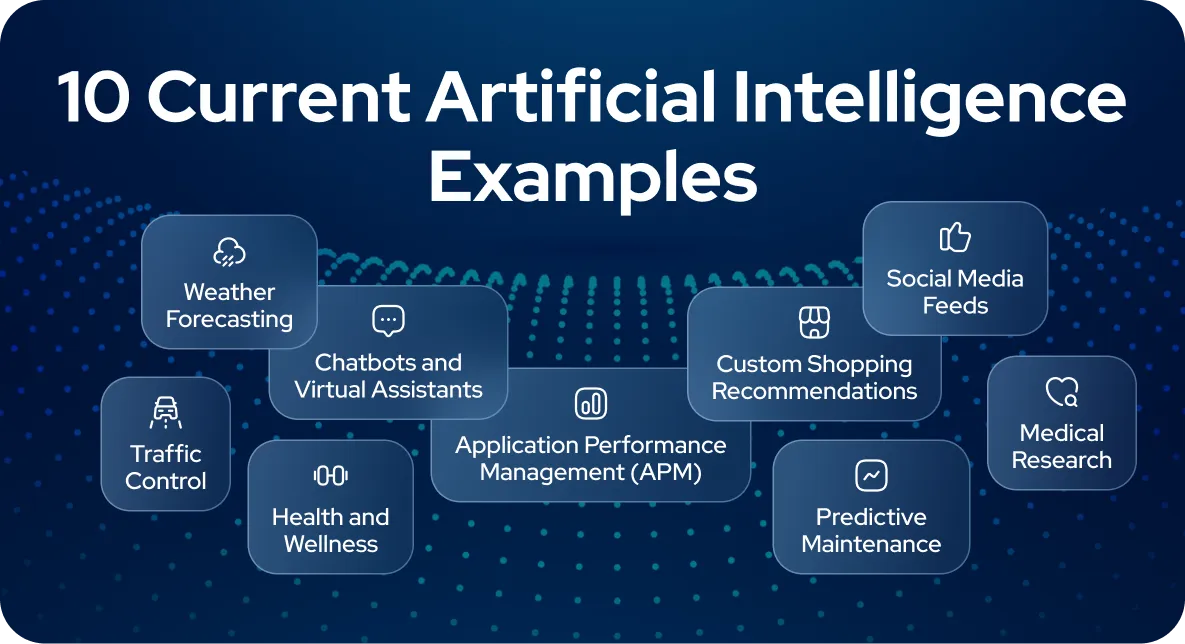

10 Current Artificial Intelligence Examples

Artificial intelligence serves many different purposes depending on the industry. From chatbots to health monitoring to scientific research, AI has proven itself a useful tool that performs tasks in a fraction of the time it would take an entire team of humans. Though not a complete list, the examples below show how organizations apply AI in various ways.

1. Application Performance Monitoring (APM)

Application performance monitoring (APM) involves using software and telemetry data to track how well essential applications perform. AI-powered APM tools analyze past data to forecast problems before they happen. They can also help developers resolve issues quickly by offering actionable suggestions. This approach helps maintain smooth operations and shortens performance slowdowns.

For instance, Asana develops tools that support collaboration and project management. The company uses AI APM solutions to constantly track application performance, identify possible problems, and rank them by urgency. This setup allows teams to quickly act on machine learning–based suggestions and fix performance drops on the spot.

2. Chatbots and Virtual Assistants

AI chatbots and virtual assistants hold more advanced, human-like conversations by understanding context and producing clear, relevant replies to complex language and customer questions. They perform well in areas like customer service, virtual help, and content creation. Their ability to learn over time helps them adapt and boost performance to improve user satisfaction and operational efficiency.

For example, Webull, a global online broker, struggled with managing large volumes of data spread across different platforms. To address this issue, the company deployed an AI-powered assistant that could gather and process information from all departments, including customer support, marketing, and recruitment. By using AI, Webull cut onboarding time for new employees by 43% and reduced time spent on recruitment tasks by 47%.

3. Custom Shopping Recommendations

Online retailers use AI to personalize your shopping experience. By examining your browsing behavior, past purchases, and how long you view certain items, AI recommends products that fit your interests. This feature helps you find what you need faster and introduces you to new options you might like.
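A simple version of this idea is to find the shopper whose purchase history overlaps most with yours and suggest what they bought that you have not. The sketch below uses Jaccard similarity on made-up purchase sets. Production recommenders typically combine many more signals, such as browsing time, so treat this as an illustration rather than how any specific retailer works.

```python
# Hypothetical purchase histories keyed by shopper.
HISTORY = {
    "ana":   {"tent", "stove", "lantern"},
    "ben":   {"tent", "stove", "sleeping_bag"},
    "chloe": {"novel", "bookmark"},
}


def jaccard(a, b):
    """Overlap between two sets: shared items divided by all distinct items."""
    return len(a & b) / len(a | b)


def recommend(user, history):
    """Suggest items owned by the most similar other shopper."""
    others = [name for name in history if name != user]
    nearest = max(others, key=lambda name: jaccard(history[user], history[name]))
    return sorted(history[nearest] - history[user])


print(recommend("ana", HISTORY))  # ['sleeping_bag']
```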

4. Health and Wellness

In healthcare, AI supports diagnosis, therapy planning, and patient tracking. For instance, AI tools examine medical scans to spot early indicators of illnesses such as cancer. These systems combine data from wearable devices, health records, and genetic background to help physicians plan treatments for long-term conditions.

5. Intelligent Document Processing (IDP)

Intelligent document processing turns unstructured data into organized, usable data. For example, it organizes emails, scanned images, and PDFs into structured content. IDP relies on AI tools such as natural language processing, deep learning, and computer vision to extract, sort, and verify information.

For example, the Bureau of Land Management, which handles federal property titles in the United States, processes complex legal documents for property transactions. To speed up this task, the bureau implemented an AI tool that automates document comparison. AI reduced review time by 44% and significantly accelerated the property transfer approval process.

6. Medical Research

Medical research applies AI to simplify workflows, execute repetitive duties, and analyze large volumes of data. Researchers use AI to support every stage of drug discovery and development, convert medical notes into digital records, and shorten the time needed to bring new treatments to market.

As a real-world example, Illumina uses AI to power large-scale, flexible genomic workflows and clinical analyses. By taking care of the computational workload, AI allows researchers to concentrate on clinical outcomes and refining methods. Engineering teams also apply AI to slash resource usage, system upkeep, and expenses.

7. Predictive Maintenance

AI-powered predictive maintenance analyzes large datasets to detect issues that could cause system failures and service interruptions. The technology flags potential problems in advance so teams can address them, minimizing downtime and avoiding costly disruptions.

For example, 3M runs over 200 international manufacturing facilities and operates around the clock to supply medical technology. The company uses predictive maintenance to automatically spot irregularities in industrial machinery. When automatically alerted, teams can take early action, minimize downtime, and boost overall efficiency.
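One basic way to spot irregularities is to compare new sensor readings against a baseline and flag values that fall far outside the normal range. The sketch below uses a z-score threshold on made-up vibration readings. It illustrates the general idea only and does not describe the method any particular company uses.

```python
from statistics import mean, stdev

# Made-up vibration readings from one machine; the last new value is a spike.
BASELINE = [0.50, 0.52, 0.49, 0.51, 0.50, 0.48, 0.51, 0.49]
LATEST = [0.50, 0.53, 0.95]


def find_anomalies(baseline, readings, threshold=3.0):
    """Flag readings more than `threshold` standard deviations from normal."""
    center, spread = mean(baseline), stdev(baseline)
    return [r for r in readings if abs(r - center) / spread > threshold]


print(find_anomalies(BASELINE, LATEST))  # [0.95]
```

A reading of 0.53 is slightly high but still within normal variation, so only the 0.95 spike triggers an alert.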

8. Traffic Control

AI systems process live geospatial data to forecast traffic flow, improve route planning, and recommend detours during heavy congestion. The technology helps drivers reach their destinations more quickly while reducing fuel use and emissions, supporting a more eco-friendly commute.

9. Social Media Feeds

AI curates your social media feeds and powers the recommendations on your favorite streaming services. These platforms use AI algorithms to study your watching and listening habits and suggest content that matches your tastes. The system factors in your viewing history, popular titles, and patterns from similar users to keep your feed filled with relevant, engaging options.

10. Weather Forecasting

AI forecasting involves predicting upcoming events and trends by analyzing past data. For instance, weather prediction systems use AI to forecast conditions and help people prepare for storms and other inclement weather.

Artificial Intelligence Modalities

AI is available to consumers in different modalities. When an AI system incorporates several different modalities, we call it “multimodal AI.” Multimodal AI combines disparate data types, such as text, images, audio, and video, to interpret complex information for advanced applications like self-driving cars. Key capabilities include text-to-image generation for creating visuals from prompts, text-to-speech for producing human-like speech, and text generation for crafting written content. Let’s explore each modality together.

Multimodal AI

Multimodal AI merges various types of data, such as text, visuals, and audio, to build a fuller understanding of information. For example, a multimodal system might process a video by recognizing spoken language, identifying visual objects, and reading on-screen text. This approach underpins self-driving cars, where interpreting multiple data sources at once is necessary to make safe decisions.

Text-to-Image Generation

Image generation uses AI to produce new visuals based on written prompts. For instance, given a phrase like “a sunrise over the ocean,” the system can create a realistic or stylized image of that scene. This technology supports fields like art, media, and advertising by helping creators bring ideas to life quickly and with minimal effort.

Text-to-Speech Generation

Speech generation permits AI to produce human-like spoken responses, similar to how virtual assistants like Siri interact with users. Speech recognition allows AI to interpret and process spoken language. The technology powers voice-controlled devices, automated customer support systems, and communication tools that assist individuals with speech or mobility challenges.

Text Generation

Text generation allows AI to produce written content that resembles human writing. It can generate anything from basic sentences to full articles, stories, and poems. This capability sees use in chatbots, automated content creation, and tasks like drafting emails and generating reports.

Strong AI vs. Weak AI

To explain how AI operates when dealing with different levels of complexity, researchers have outlined two broad categories that reflect its level of advancement.

Weak AI, also called “narrow AI,” refers to systems built to handle a single task or a limited group of tasks. Examples include voice assistant apps like Google Assistant, Amazon Alexa, and Apple Siri, chatbots on social media, and self-driving features found in the latest vehicles.

Strong AI, also known as “artificial general intelligence” or “general AI,” refers to systems that can understand, learn, and apply knowledge to a broad range of tasks at a level equal to or beyond human intelligence. This type of AI remains theoretical, with no existing systems reaching this degree of complexity. Many experts believe achieving AGI would require massive leaps in computing power. Despite progress in AI research, self-aware machines seen in science fiction remain purely fictional.

Benefits of Artificial Intelligence

Artificial intelligence increases speed, precision, and efficiency in almost every industry. Its ability to process massive datasets, detect patterns, and make decisions in real time allows AI to take on roles once limited to humans. Beyond supporting human workers, AI systems can function independently, tirelessly, and at scale. Find the top benefits of artificial intelligence below.

Automation

AI can automate workflows and function independently without constant human input. For example, it can tighten up cybersecurity by constantly scanning and analyzing network traffic for threats. In a smart factory, multiple AI systems might work together, such as robots using computer vision to move safely and inspect products for flaws, digital twins simulating real-world processes, and real-time analytics tracking performance.

Reduce Human Error

AI reduces human error by automating data processing, analysis, and manufacturing assembly. By following consistent algorithms and workflows, AI systems perform tasks with precision and repeatability, minimizing mistakes that often result from manual effort.

Eliminate Repetitive Tasks

AI executes repetitive tasks, allowing workers to focus on more meaningful, strategic work. It automates document verification, call transcription, and answering basic customer queries like “what days are you open?” In industrial settings, robots often take over tasks that are dull, hazardous, or physically demanding to improve safety and efficiency.

Fast and Accurate

AI can analyze large volumes of information much faster than a person, spotting patterns and uncovering connections that humans might overlook. This ability makes AI valuable for tasks that involve complex data analysis and decision-making.

Infinite Availability

AI does not depend on the time of day, rest periods, or other human needs. When deployed in the cloud, AI and machine learning systems run around the clock, carrying out their assigned functions without interruption.

Accelerated Research and Development

AI’s capacity to rapidly process massive datasets speeds up discoveries in research and development. For example, predictive AI models identify possible new drug treatments and analyze the human genome with greater accuracy and efficiency.

Challenges With Artificial Intelligence

While AI offers substantial benefits, it also introduces a new class of risks that organizations must address to ensure safe, reliable use. From compromised datasets and vulnerable models to ethical pitfalls and operational failures, these threats can undermine trust, performance, and compliance. Below, you can find the top four challenges facing AI developers.

Data Risks

AI systems depend on datasets that may introduce risks like data poisoning, tampering, bias, and cyberattacks, which can result in data breaches. To reduce these threats, organizations should preserve data integrity and apply strong security measures at every point in the AI lifecycle, including development, training, deployment, and maintenance.

Ethics and Legal Risks

If organizations overlook safety and ethics in AI development and deployment, they risk breaching privacy and generating biased results. For instance, using skewed training data in hiring tools can reinforce gender and racial stereotypes, leading to models that unfairly favor specific demographic groups.

Model Risks

Malicious actors may attempt to steal, reverse-engineer, and manipulate AI models. They can undermine a model’s integrity by altering its architecture, weights, and parameters, which are critical elements that affect how the model functions, how accurate it is, and how well it performs.

Operational Risks

Like any technology, AI models face operational risks such as model drift, bias, and lapses in oversight. If not properly managed, these issues can cause system failures and create security gaps that attackers may exploit.

History of AI

The concept of a thinking machine traces all the way back to ancient Greek philosophy. Since the advent of electronic computing, several key events and breakthroughs have guided the development of AI. Let’s review the history of artificial intelligence.

1950

Alan Turing publishes Computing Machinery and Intelligence. In his influential paper, Turing, known for cracking the German ENIGMA code during World War II and widely regarded as the Father of Computer Science, poses the provocative question: Can machines think?

Turing then proposes a method, now known as the “Turing Test,” where a human interrogator attempts to tell the difference between a computer’s and a human’s text-based responses. Although the test has faced extensive criticism and debate over the years, it remains a landmark in AI history and continues to influence philosophical discussions, especially those involving language and meaning.

1956

John McCarthy introduces the term “artificial intelligence” at the inaugural AI conference held at Dartmouth College. That same year, Allen Newell, J.C. Shaw, and Herbert Simon build the Logic Theorist, the first functioning AI computer program.

1958

Frank Rosenblatt develops the Mark 1 Perceptron, the first neural network–based computer that learns by trial and error. In 1969, Marvin Minsky and Seymour Papert release Perceptrons, a book that becomes a foundational text on neural networks while also discouraging further research in the field.

1980s

AI researchers begin widely applying neural networks that train themselves using the backpropagation algorithm.

1995

Stuart Russell and Peter Norvig release Artificial Intelligence: A Modern Approach, which quickly becomes a top textbook in the AI field. In the book, they explore four possible aims or definitions of AI, distinguishing systems by whether they think or act, and whether they do so like humans or rationally.

1997

IBM’s Deep Blue defeats reigning world chess champion Garry Kasparov in a six-game rematch, one year after Kasparov won their first match.

2004

John McCarthy publishes a paper titled What Is Artificial Intelligence?, where he offers a widely referenced definition of AI. Around this time, the rise of big data and cloud computing begins, allowing organizations to process massive volumes of information that would later train AI systems.

2011

IBM Watson® defeats Jeopardy! champions Ken Jennings and Brad Rutter. Around the same period, data science starts gaining traction as a prominent, in-demand field.

2015

Baidu’s Minwa supercomputer employs a specialized deep neural network known as a convolutional neural network to recognize and classify images more accurately than the average person.

2016

DeepMind’s AlphaGo, driven by a deep neural network, defeats world Go champion Lee Sedol in a five-game series. The win is notable due to the game’s vast complexity, with more than 14.5 trillion possible move combinations after just four turns. Google had acquired DeepMind in 2014 for a reported $400 million.

2022

The emergence of large language models, like OpenAI’s ChatGPT, marks a major leap in AI’s ability to generate business value. These new generative AI methods allow deep learning models to pretrain on massive datasets, greatly improving performance and versatility.

2024

Current AI trends show a continued surge in innovation. Multimodal models that process multiple data types now deliver more immersive experiences by combining image recognition from computer vision with speech and text understanding from NLP. At the same time, smaller models are advancing rapidly, offering strong performance as returns diminish when scaling up massive models with billions of parameters.
