Large language models (LLMs) have placed AI on the global stage, but they represent only the starting point. The true technological breakthrough occurs when LLMs become AI agents. These smart, purposeful enterprise systems are autonomous agents capable of reasoning, making choices, and acting on their own. LLM agents speed up the creation of AI tools by powering automated research bots and agentic AI systems that handle multi-step tasks.

Let’s explore what LLM agents are, how they function, their various types, practical applications, and the hurdles they face. Whether you work as a developer, entrepreneur, or AI hobbyist, this comprehensive guide will give you a clear view of what intelligent agents will become.

What Is an LLM Agent?

LLM agents are AI systems that rely on large language models to understand language, engage in dialogue, and complete specialized tasks.

Developers build LLM agents on top of language models trained on massive text datasets, which lets the agents process and generate language with human-like fluency.

You can embed LLM agents into AI chatbots, virtual assistants, content creation platforms, and a wide range of practical applications.

These agents can strategize, analyze, utilize external tools, retain information, and function independently to solve complex tasks. Simply put, they convert passive LLMs into purposeful, autonomous AI systems.

Unlike a typical LLM, such as OpenAI GPT-4.5 or Claude Sonnet 4, which answers one prompt at a time, an LLM agent follows an iterative process to meet specific goals. It assesses the user’s stated objective, determines the next move, performs actions, examines the outcome, and repeats the cycle until it reaches the target.
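The cycle described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production agent: the counter environment, the `ACTIONS` table, and the `toy_policy` function (a stand-in for the LLM call that would pick the next move) are all assumptions made for this example.

```python
# Hypothetical toy environment: the "world" is a single integer counter.
ACTIONS = {
    "increment": lambda n: n + 1,
    "double": lambda n: n * 2,
}

def toy_policy(state, goal):
    """Stand-in for the LLM call that chooses the next action."""
    return "double" if state > 0 and state * 2 <= goal else "increment"

def run_agent(goal, decide, max_steps=20):
    """Loop: assess the objective, pick a move, act, examine the outcome."""
    state = 0
    history = []
    for _ in range(max_steps):
        if state == goal:                        # assess: target reached?
            break
        action = decide(state, goal)             # determine the next move
        state = ACTIONS[action](state)           # perform the action
        history.append(f"{action} -> {state}")   # record the observed outcome
    return state, history

final_state, history = run_agent(10, toy_policy)
print(final_state, history)
```

The `max_steps` cap is a design choice worth keeping in any real loop, since an agent that never reaches its goal would otherwise run forever.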

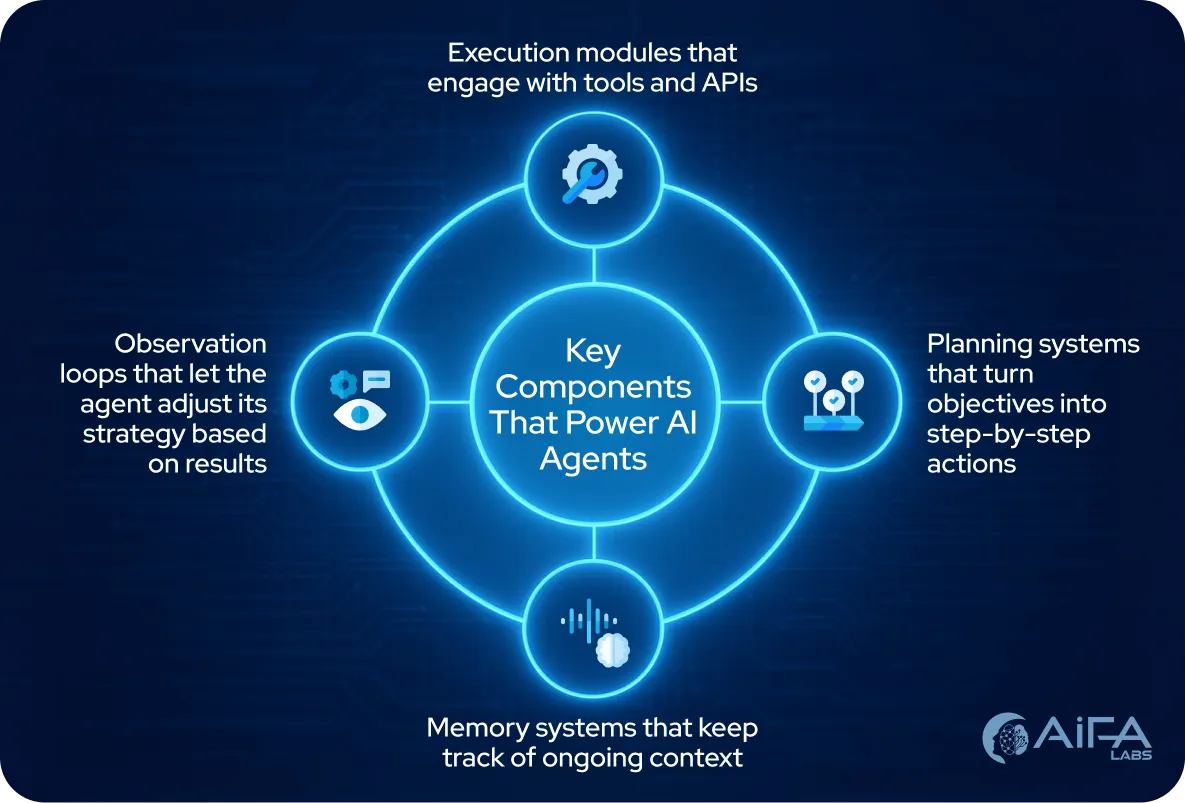

Agents do what they do by layering several components around the core language model, including:

- Planning systems that turn objectives into step-by-step actions

- Execution modules that engage with tools and APIs

- Memory systems that keep track of ongoing context

- Observation loops that let the agent adjust its strategy based on results

LLM Agent Architecture

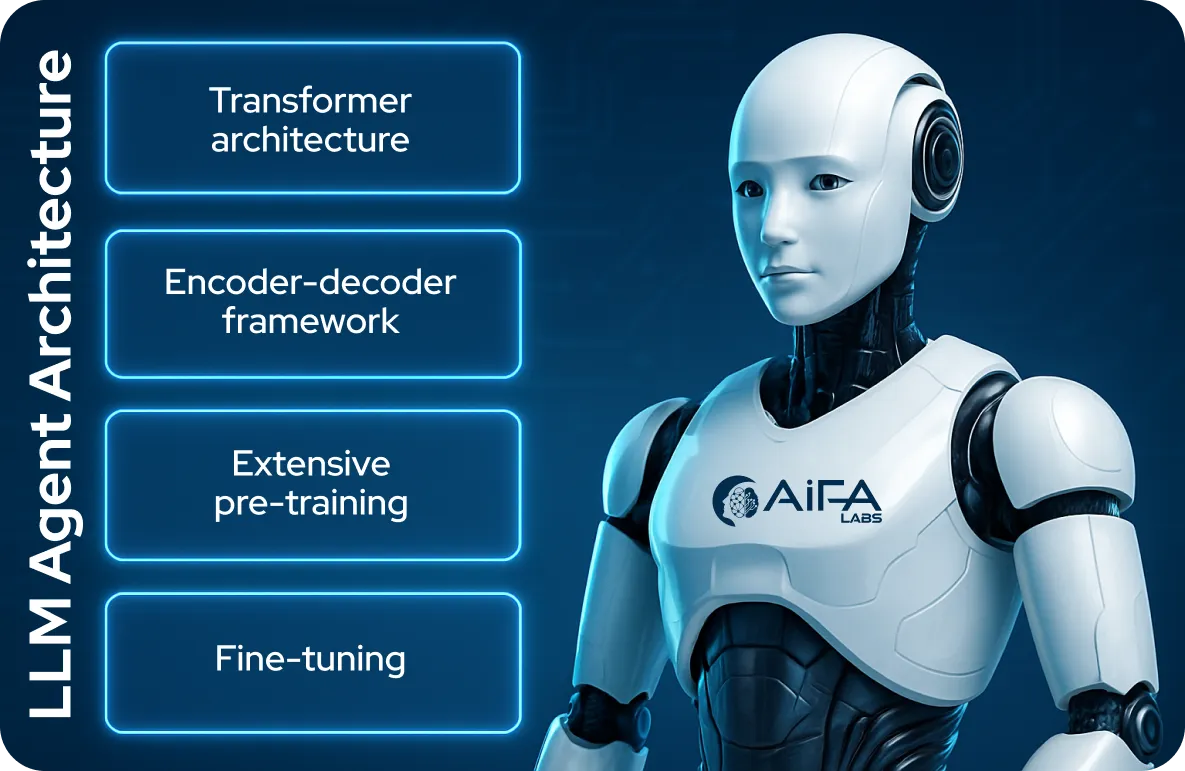

The structure of large language model agents relies on neural networks, particularly deep learning systems built to manage language-related tasks.

The main parts of an LLM agent’s framework include:

- Transformer architecture – Transformers apply self-attention to weigh the significance of each word in a sentence and use multi-head attention to focus on the sentence’s various parts simultaneously. They also add positional encodings to input embeddings so the model can recognize word order within the text.

- Encoder-decoder framework – The encoder analyzes the input text, and the decoder produces the output. Some models rely solely on the encoder or the decoder, while others utilize both components for full input-output processing.

- Extensive pre-training – Developers train models on massive collections of text from books, websites, and other sources. Pre-training allows the model to grasp language structure, learn factual information, and build a broad knowledge base.

- Fine-tuning – After the initial pre-training, developers refine the models using specialized datasets tailored to specific fields. This process boosts the accuracy and effectiveness of LLMs in performing their assigned tasks.
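To make the transformer item above more concrete, here is a small sketch of single-head scaled dot-product self-attention and a sinusoidal positional encoding in plain Python. It assumes the sinusoidal scheme from the original transformer design, which is only one of several positional-encoding options, and it omits the learned query, key, and value projections and the multiple heads that real models use.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """For each query, return a weighted average of the value vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even indices, cosine on odd indices."""
    return [math.sin(position / 10000 ** (i / d_model)) if i % 2 == 0
            else math.cos(position / 10000 ** ((i - 1) / d_model))
            for i in range(d_model)]
```

When every key is identical, the attention weights are uniform and the output is simply the mean of the value vectors, which is a quick sanity check for an implementation like this one.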

Now that we understand the architecture of LLM agents, let’s look at their main components.

LLM Agent Core Components and Framework

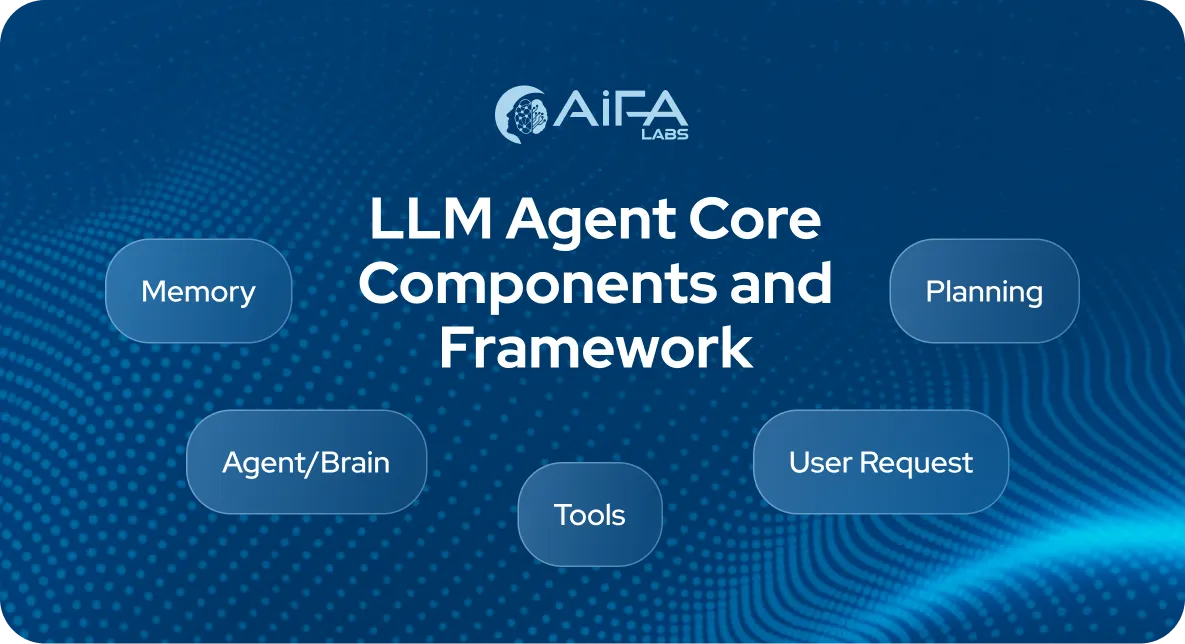

An LLM agent framework usually consists of the following five core components:

- Agent/Brain – The core of the agent, which acts like an orchestra conductor

- Memory – Organizes the agent’s previous outputs and behaviors

- Planning – Helps the agent plan future tasks

- Tools – Integrate with the agent to perform specific tasks

- User Request – A user query or directive

Let’s explore each component individually.

Agent/Brain

At the heart of an LLM agent lies a language model that comprehends and interprets text using extensive training data.

When you interact with an LLM agent, you begin by providing a clear prompt. This AI prompt instructs the agent how to reply, which tools to activate, and what objectives to pursue.

You can also customize an agent by assigning it a defined persona. The process involves configuring the agent with specific traits and areas of knowledge, making it more effective for certain tasks and conversations.
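As a rough illustration of how a prompt and persona might be assembled, the sketch below builds a two-message request. The `build_messages` helper and the role/content dictionary layout are assumptions for this example; the layout mirrors a convention many chat-style model APIs use, but the exact format depends on the provider.

```python
def build_messages(persona, objective, tools, user_prompt):
    """Combine persona, objective, and tool list into a system message."""
    tool_list = ", ".join(tools) if tools else "none"
    system = (
        f"You are {persona}.\n"
        f"Objective: {objective}\n"
        f"Available tools: {tool_list}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_prompt},
    ]

messages = build_messages(
    persona="a patient billing-support specialist",
    objective="resolve invoice questions accurately",
    tools=["invoice_lookup", "refund_request"],
    user_prompt="Why was I charged twice this month?",
)
```

Keeping the persona and tool list in one place makes it easy to reuse the same agent core with a different configuration.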

At its core, an LLM agent merges powerful processing capabilities with flexible settings, allowing it to manage and adjust to a wide range of tasks and conversations.

Memory

An LLM memory module stores the agent’s internal logs, including past conversations, thoughts, actions, and environmental feedback, as well as all exchanges between the user and the agent. Researchers have identified the following two primary types of memory in LLM agents:

- Short-term memory – Holds contextual details about the agent’s present state through in-context learning; its capacity is limited by the model’s context window

- Long-term memory – Stores the agent’s conversation history, past interactions, and reflections in an external vector database, where retrieval-augmented generation (RAG) gives the agent quick and scalable access to relevant data whenever it requires it

Hybrid memory combines short-term and long-term memory to augment the agent’s capacity for extended reasoning and experience retention.

Developers can also choose from various memory formats when designing agents. Common formats include plain language, vector embeddings, structured databases, and organized lists. Developers can blend these formats, for example in a key-value structure that pairs natural-language entries with embedding vectors used for retrieval.
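The sketch below shows one way the short-term and long-term pieces could fit together. Everything here is a simplification for illustration: the `HybridMemory` class is hypothetical, the `embed` function is a bag-of-words stand-in for a real embedding model, and a plain list stands in for the vector database.

```python
import math
from collections import Counter, deque

def embed(text):
    """Toy embedding: word counts (a real agent would call an embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

class HybridMemory:
    def __init__(self, short_term_size=3):
        # Short-term: only the most recent entries, as would fit in the prompt.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: (embedding, text) pairs, searched by similarity.
        self.long_term = []

    def add(self, text):
        self.short_term.append(text)
        self.long_term.append((embed(text), text))

    def recall(self, query, k=1):
        """Return the k stored entries most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term,
                        key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

memory = HybridMemory()
for note in ["user prefers dark mode", "meeting scheduled for friday",
             "order 1234 was shipped", "the weather is sunny"]:
    memory.add(note)
```

After the fourth note is added, the oldest one drops out of `short_term` but can still be found through `recall`, which is the behavior a hybrid design aims for.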

The planning and memory modules work together to let the agent function in changing environments, helping it remember previous actions and strategize for upcoming steps.

Planning

With a planning module, LLM agents can think logically, divide complex tasks into simpler steps, and create targeted strategies for each. As tasks shift, agents can review and revise their plans to stay in tune with new demands.

Planning usually includes two core phases: plan formulation and plan reflection.

Planning Formulation

In this phase, agents divide a complex task into smaller, manageable subtasks. Some task-splitting strategies build a complete plan upfront and execute it step by step. The Chain of Thought (CoT) method supports a more flexible approach where agents handle one sub-task at a time, adapting as they go. The Tree of Thought (ToT) method expands on CoT by mapping out multiple solution paths. It breaks the challenge into sequential steps, produces several options at each stage, and organizes them like branches on a tree.
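The branching idea behind Tree of Thought can be approximated with a small beam search. This is a sketch under stated assumptions, not the method from any specific paper or library: `propose` and `score` are hypothetical stand-ins for the LLM calls that would generate candidate next steps and rate partial solutions, and the toy task (pick three numbers that sum to 11) exists only to make the example runnable.

```python
def tree_of_thought(root, propose, score, depth, beam_width=2):
    """Expand several options per step and keep only the most promising branches."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)

TARGET = 11

def propose(state):
    """Stand-in for the model generating next-step options."""
    return [state + (n,) for n in (1, 3, 5)]

def score(state):
    """Stand-in for the model evaluating a partial solution (higher is better)."""
    return -abs(TARGET - sum(state))

best = tree_of_thought((), propose, score, depth=3)
print(best, sum(best))
```

A Chain of Thought approach corresponds roughly to `beam_width=1`, where the agent follows a single line of reasoning instead of comparing branches.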

Some methods follow a layered approach or arrange plans in a decision-tree format, examining all potential choices before settling on a final strategy. Although LLM-based agents typically possess broad knowledge, they face challenges with tasks that demand expert-level understanding. Pairing these agents with domain-specific planning systems increases their accuracy and effectiveness.

Planning Reflection

Once agents build a plan, they must evaluate and refine its effectiveness. LLM agents use internal review systems, relying on pre-trained models to improve their approaches. They also incorporate human input, adjusting strategies based on feedback and preferences. Additionally, agents observe their physical and digital surroundings to further optimize their plans.

ReAct and Reflexion are two powerful techniques agents use to integrate feedback into their planning process.

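ReAct permits an LLM to tackle complex problems by looping through thinking, acting, and observing in sequence. It processes environmental feedback, including observations, human input, and responses from other models. This approach lets the LLM refine its strategy on the fly, boosting its accuracy and responsiveness when solving problems.

Reflexion builds on this kind of loop by having the agent reflect in natural language on feedback from earlier attempts and store those reflections in memory, so later attempts can avoid the same mistakes.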
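A ReAct-style loop of thinking, acting, and observing might look like the sketch below. The `scripted_llm` function is a hypothetical stand-in that returns canned steps in place of a real model, the two tools are toys, and the `Action: tool[input]` text format is one common convention assumed here for illustration.

```python
import re

# Hypothetical toy tools the agent is allowed to call.
TOOLS = {
    "lookup": lambda q: {"capital of france": "Paris"}.get(q.lower(), "unknown"),
    "length": lambda s: str(len(s)),
}

def scripted_llm(transcript):
    """Stand-in for a model call: chooses the next step from the transcript so far."""
    if "Observation: Paris" not in transcript:
        return "Thought: I need the capital first.\nAction: lookup[capital of France]"
    if "Observation: 5" not in transcript:
        return "Thought: Now count its letters.\nAction: length[Paris]"
    return "Thought: I have what I need.\nFinal Answer: Paris has 5 letters"

def react(question, llm, max_turns=5):
    """Alternate model steps and tool observations until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_turns):
        step = llm(transcript)
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = re.search(r"Action: (\w+)\[(.*)\]", step)
        if match is None:
            observation = "could not parse an action"
        else:
            name, arg = match.groups()
            tool = TOOLS.get(name)
            observation = tool(arg) if tool else f"unknown tool {name}"
        transcript += f"\nObservation: {observation}"  # feed the result back
    return None, transcript

answer, transcript = react("How many letters are in the capital of France?",
                           scripted_llm)
print(answer)
```

Feeding parse failures and unknown-tool errors back as observations, rather than raising, gives the model a chance to correct itself on the next turn.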

Tools

In this context, tools refer to a range of external resources that LLM agents interact with to complete specific tasks. These activities may involve pulling data from databases, running queries, or writing code. When an LLM agent uses these tools, it follows predefined procedures to execute tasks, capture observations, and retrieve the data required to finish subtasks and meet user demands.

Here are a few examples showing how various systems incorporate these tools:

- Modular Reasoning, Knowledge, and Language (MRKL) — This framework combines specialized modules from advanced neural networks with basic tools like calculators and GPS. The central LLM functions as a dispatcher, routing each query to the most suitable expert module based on the task at hand.

- Toolformer and Tool-Augmented Language Models (TALM) — Developers fine-tune these models to work with external APIs. For example, they can train the model to access a financial API and analyze stock market trends or forecast currency shifts, allowing it to deliver real-time financial data and insights to users.

- HuggingGPT — This system uses ChatGPT to coordinate tasks by choosing the most suitable models from the HuggingFace library for each request, executing those tasks, and then compiling the results into a summary.

- API-Bank — This benchmark evaluates how effectively LLMs use 53 widely used APIs to complete tasks such as appointment scheduling, managing health records, and controlling smart home devices.

- Function Calling – This technique lets LLMs use tools: the developer specifies a set of API functions and includes their definitions in the model’s input request, and the model responds with the function it wants to call and the arguments to pass.
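The function-calling pattern from the last item can be sketched as a tool specification plus a dispatcher. The `get_weather` tool, the `TOOL_SPECS` layout, and the shape of the model's tool-call JSON are assumptions for this example; the JSON-Schema-style parameter description resembles what several providers accept, but field names vary between APIs.

```python
import json

def get_weather(city, unit="celsius"):
    """Hypothetical tool: returns canned data instead of calling a real API."""
    return {"city": city, "temperature": 21, "unit": unit}

# Definitions that would be sent to the model along with the user's request.
TOOL_SPECS = [{
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}, "unit": {"type": "string"}},
        "required": ["city"],
    },
}]
REGISTRY = {"get_weather": get_weather}

def dispatch(tool_call_json):
    """Validate a model-produced tool call and run the matching function."""
    call = json.loads(tool_call_json)
    spec = next((s for s in TOOL_SPECS if s["name"] == call.get("name")), None)
    if spec is None:
        return json.dumps({"error": f"unknown tool: {call.get('name')}"})
    args = call.get("arguments", {})
    allowed = spec["parameters"]["properties"]
    missing = [p for p in spec["parameters"]["required"] if p not in args]
    extra = [a for a in args if a not in allowed]
    if missing or extra:
        return json.dumps({"error": {"missing": missing, "unexpected": extra}})
    return json.dumps(REGISTRY[spec["name"]](**args))

result = dispatch('{"name": "get_weather", "arguments": {"city": "Oslo"}}')
print(result)
```

Checking arguments before the call matters because the model's output is untrusted text, and a malformed call should come back as an error the agent can read instead of crashing the loop.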

Now that we have a basic understanding of how LLM agents use tools, let’s examine the final component: user requests.

User Request

User requests serve as the primary input that drives LLM agent behavior. These requests define the objective, scope, and context of each task, helping the agent interpret, plan, and act. Whether simple or complex, user inputs guide the agent’s decision-making, tool use, and response generation. By continuously analyzing and adapting to user queries, LLM agents maintain alignment with human intent, making interactions purposeful, efficient, and contextually relevant.

Common Types of LLM Agents

There are several different types of LLM agents, including single-action LLM agents, multi-agent systems, reactive agents, and many others. Let’s explore the most common types of LLM agents in turn.

Single-Action LLM Agents

Task-Oriented Agents

Developers create task-oriented agents to handle defined duties, such as responding to inquiries, booking appointments, and offering customer service.

Conversational Agents

Conversational agents facilitate back-and-forth interactions with users and are commonly linked to chatbots, among other use cases.

Multi-Agent LLMs

Collaborative Agents

Collaborative agents coordinate and cooperate within a multi-agent system to reach a shared objective, particularly when solving complicated problems.

Competitive Agents

In contrast to multi-agent collaboration, competitive agents operate in rival settings to simulate real-world challenges and train models to adapt to actual market dynamics.

Reactive LLM Agents

Event-Driven Agents

Event-driven agents respond to specific triggers and environmental changes, like live alerts and system notifications.

Rules-Based Agents

Rules-based agents activate when preset rules or conditions are met and are frequently used in surveillance and tracking systems.
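The trigger-and-rule behavior described above can be sketched as a small rule table checked against each incoming event. The `Rule` class, the event fields, and the two sample rules are hypothetical; in an LLM agent, the action would more likely hand the event to the model than return a fixed string.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]   # when should the agent activate?
    action: Callable[[dict], str]       # what should it do in response?

RULES = [
    Rule("high_cpu",
         lambda e: e.get("type") == "metric" and e.get("cpu", 0) > 90,
         lambda e: f"scale up {e['host']}"),
    Rule("failed_login",
         lambda e: e.get("type") == "auth" and e.get("failures", 0) >= 3,
         lambda e: f"lock account {e['user']}"),
]

def handle_event(event):
    """Run the action of every rule whose condition matches the event."""
    return [rule.action(event) for rule in RULES if rule.condition(event)]

print(handle_event({"type": "metric", "host": "web-1", "cpu": 97}))
```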

Proactive LLM Agents

Predictive Agents

Predictive agents forecast user needs and upcoming events by examining past behavior and identifying trends.

Preventive Agents

Preventive agents detect possible issues in advance by studying patterns and applying solutions before problems arise.

Interactive LLM Agents

Question-Answering Agents

Question-answering agents reply to questions using the context they are given, often pulling from knowledge sources.

Advisory Agents

Advisory agents provide suggestions and guidance by evaluating user habits, needs, and preferences.

Backend Integration Agents

SQL Agent LLMs

SQL agents communicate with databases to run SQL commands, fetch records, and handle data management tasks.
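A minimal sketch of the SQL-agent idea follows, using Python's built-in `sqlite3` module and an in-memory database. The `question_to_sql` function is a hypothetical stand-in for the LLM that would translate a question into SQL, and the `customers` table is invented for the example.

```python
import sqlite3

def question_to_sql(question):
    """Stand-in for the LLM step that turns a question into a SQL query."""
    return "SELECT name FROM customers WHERE country = 'DE' ORDER BY name"

def run_readonly(conn, sql):
    """Execute model-written SQL, refusing anything that is not a SELECT."""
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(sql).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, country TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)",
                 [("Ada", "UK"), ("Bruno", "DE"), ("Clara", "DE")])

rows = run_readonly(conn, question_to_sql("Which customers are in Germany?"))
print(rows)
```

The prefix check is only a simple guard for illustration. A deployed agent would more safely connect with a database account that has read-only permissions.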

API Agents

API agents connect with application programming interfaces to collect information and initiate operations within external systems.

Domain-Specific LLM Agents

Educational Agents

Educational agents support learning by delivering lessons, offering tutoring, and supplying personalized educational materials.

Healthcare Agents

Healthcare agents manage responsibilities involving patient data, medical records, and clinical communication.

Autonomous LLM Agents

Self-Learning Agents

Self-learning agents expand their capabilities over time by absorbing feedback and applying reinforcement learning methods.

Self-Repairing Agents

Self-repairing agents detect issues in their own processes and independently correct errors without external intervention.

Hybrid LLM Agents

Multi-Functional Agents

Multi-functional agents blend various abilities to deliver more comprehensive solutions. For example, a chatbot may also process merchandise returns.

Context-Aware Agents

Context-aware agents adjust their actions based on surrounding conditions, allowing for greater adaptability and responsiveness.

How Do LLM Agents Work?

LLM agents can perform a variety of tasks, including:

- Answering questions with improved accuracy and relevance

- Summarizing content by keeping only key points

- Translating text while preserving context and subtle meanings

- Detecting sentiment for tasks like social media tracking and analysis

- Generating content that is original, engaging, and suited to specific needs

- Extracting data such as names, dates, events, or places

- Writing code, fixing bugs, or even building full applications

They accomplish these tasks using two foundational technologies:

- Natural Language Understanding (NLU) — Helps LLM agents grasp human language, recognize intent, context, emotion, and subtle cues

- Natural Language Generation (NLG) — Allows LLM agents to produce fluent, context-aware, and meaningful text

What sets LLM agents apart is their ability to generalize from massive datasets. This strength enables them to handle various tasks with impressive precision. Additionally, developers can customize and fine-tune them for targeted applications in customer service, finance, and healthcare.

How to Assess AI Large Language Model Agents

Just like assessing LLMs, evaluating language agents poses significant challenges. Common evaluation approaches include:

- Benchmarks – A range of dedicated benchmarks to test and measure LLM agent capabilities

- Human Annotation – Involves human reviewers who directly score agent outputs based on critical criteria such as truthfulness, usefulness, engagement, and fairness

- Metrics – Thoughtfully crafted measurements used to assess agent quality, including task completion rates, human-likeness scores, and performance efficiency

- Protocols – Standard evaluation procedures that guide how metrics are applied, such as real-world simulations, social interaction testing, multi-task performance, and software validation

- Turing Test – Requires human judges to compare responses from humans and agents; if evaluators cannot tell them apart, the agent demonstrates human-level performance
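Metrics such as task completion rate and performance efficiency can be computed from logged agent runs. The sketch below assumes a hypothetical `Episode` record with a success flag, a step count, and a duration; real evaluation harnesses track more detail.

```python
from dataclasses import dataclass

@dataclass
class Episode:
    task_id: str
    succeeded: bool
    steps: int
    seconds: float

def summarize(episodes):
    """Aggregate completion rate and efficiency figures over a set of runs."""
    n = len(episodes)
    if n == 0:
        return {"completion_rate": 0.0, "avg_steps": 0.0, "avg_seconds": 0.0}
    return {
        "completion_rate": sum(e.succeeded for e in episodes) / n,
        "avg_steps": sum(e.steps for e in episodes) / n,
        "avg_seconds": sum(e.seconds for e in episodes) / n,
    }

runs = [
    Episode("book-flight", True, 4, 1.0),
    Episode("refund-order", True, 6, 2.0),
    Episode("reset-password", False, 10, 3.0),
    Episode("update-address", True, 4, 2.0),
]
report = summarize(runs)
print(report)
```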

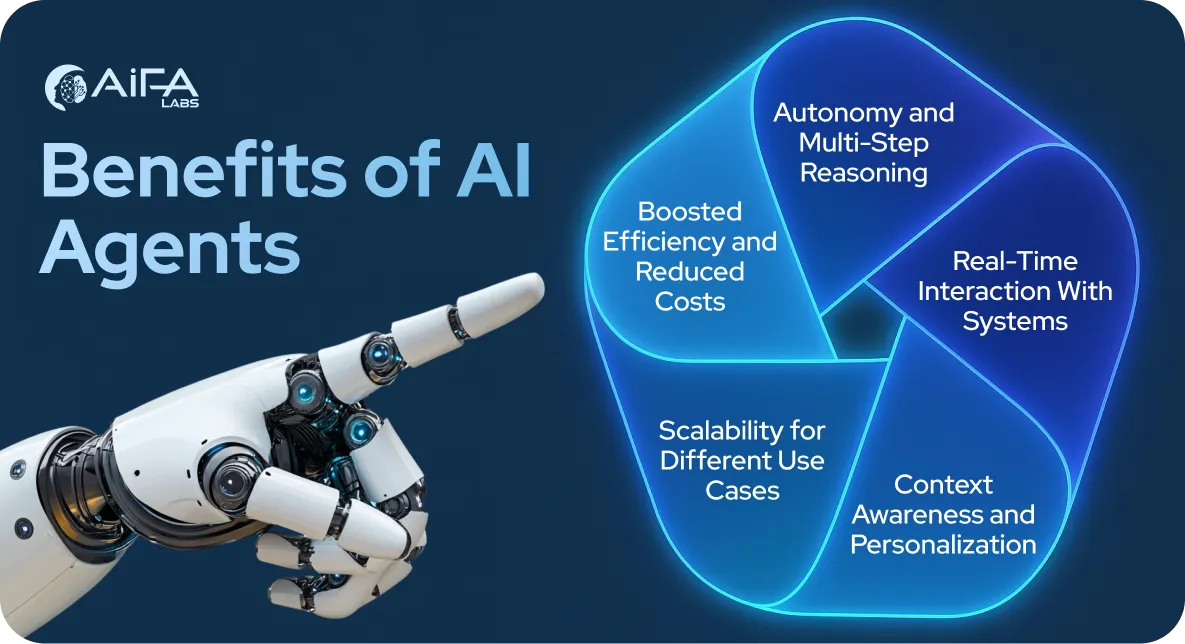

Benefits of AI Agents

LLM agents represent a significant advancement in applying AI to real-world tasks. By blending the cognitive strength of large language models with memory, strategic planning, and tool integration, these agents evolve from passive assistants into self-directed collaborators. This change in structure brings practical advantages in technical fields and business operations.

Autonomy and Multi-Step Reasoning

Unlike standard LLMs that handle one prompt at a time, agents tackle complicated workflows by dividing up tasks, using external AI tools, and repeating steps until completion. This independence allows them to autonomously carry out multi-stage business operations, such as examining data, extracting insights, creating a presentation, and sending a final report.

Real-Time Interaction With Systems

By integrating with external tools, agents retrieve real-time data, communicate with APIs, and modify files and databases directly. This capability to pull fresh information overcomes the static knowledge limits of pre-trained models. For organizations, it means agents can connect with CRMs, analytics platforms, scheduling systems, and internal software.

Context Awareness and Personalization

Memory systems allow LLM applications to retain context during conversations. This technology lets them recall user preferences, follow previous actions, and tailor contextually relevant responses. As they interact more, agents adjust their language, content, and suggestions based on observed user patterns, creating a more natural, personalized experience.

Scalability for Different Use Cases

LLM-powered agents demonstrate impressive flexibility. Teams can repurpose the same core agent for different functions, such as sales, marketing, and finance, by adjusting the LLM agent’s workflow strategies and tool use. This modular setup speeds up deployment and minimizes repetitive development work.

Boosted Efficiency and Reduced Costs

By taking over routine, data-driven tasks, LLM apps reduce the workload on human teams. This efficiency boost allows staff to concentrate on strategic planning and critical decisions while agents manage day-to-day operations, resulting in noticeable gains in productivity and lower operational expenses.

Challenges With AI Agents

LLM agents showcase advanced capabilities, but their sophistication presents a range of technical and operational hurdles. Ensuring precise decisions and dependable performance demands more than just linking an LLM to a prompt cycle. Creating stable agents involves addressing multiple challenges. Some common challenges include:

- Broad Human Alignment — Aligning agents with diverse human values remains a tough challenge. One possible approach to address this issue involves reconfiguring the LLM using sophisticated prompt engineering techniques.

- Efficiency — LLM agents generate a high volume of requests processed by the language model, which can slow down the agent’s responsiveness due to its reliance on inference speed. Running multiple agents also raises cost concerns, making scalability and resource management critical factors in deployment.

- Knowledge Boundaries – Controlling the scope of an LLM’s knowledge is difficult, much like the knowledge mismatches that trigger hallucinations and factual errors, and it can affect simulation accuracy. An LLM’s built-in knowledge may introduce bias or rely on information unknown to the user, influencing the agent’s behavior.

- Long-Term Planning and Limited Context Windows — Managing plans over extended timelines remains difficult and can result in mistakes the agent may fail to correct. LLMs also face restrictions in the amount of context they can process, which can hinder the agent’s ability to fully utilize short-term memory and reduce its overall effectiveness.

- Prompt Stability and Dependability — LLM agents often rely on multiple prompts to power various components, like memory, planning, and decision-making. Even small changes to these prompts can cause reliability problems, a known issue with standard LLMs. Since agents use an entire prompt framework, they face an even greater risk of instability. Potential fixes include refining prompts through experimentation, auto-tuning prompts for better performance, and generating them dynamically with models like GPT. Because these agents use natural language to interact with external systems, they may receive conflicting inputs, leading to inaccurate outputs and factual inconsistencies.

- Role-Playing Ability — LLM agents usually need to adopt a specific role to perform tasks effectively within a given field. If a model struggles to represent certain roles accurately, developers can fine-tune it using datasets that reflect specific personas and psychological profiles.
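One common way to cope with the limited context window mentioned above is to trim older conversation history to a token budget. The sketch below is an assumption-laden illustration: `count_tokens` uses a crude word count where a real system would use the model's tokenizer, and `trim_history` assumes the first message is the system prompt.

```python
def count_tokens(text):
    """Crude estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())

def trim_history(messages, budget):
    """Keep the system message plus the most recent messages that fit the budget."""
    system, rest = messages[0], messages[1:]
    used = count_tokens(system["content"])
    kept = []
    for message in reversed(rest):          # walk from newest to oldest
        cost = count_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return [system] + kept[::-1]            # restore chronological order

conversation = [
    {"role": "system", "content": "be brief"},
    {"role": "user", "content": "one two three four"},
    {"role": "assistant", "content": "five six"},
    {"role": "user", "content": "seven eight nine"},
]
trimmed = trim_history(conversation, budget=8)
print([m["content"] for m in trimmed])
```

Dropping the oldest turns first is the simplest policy. Summarizing them or moving them to long-term memory are alternatives that preserve more information.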

Once developers overcome these challenges, LLM-based agents will start to perform even better than they already do.

FAQ

What are LLM agents?

LLM agents are intelligent systems that utilize large language models trained on massive text datasets to comprehend, mimic, and produce human-like language. These agents apply LLMs to handle language-based tasks for better decision-making and realistic interactions between users and systems.

What is an SAP AI agent?

An SAP AI agent is an application powered by artificial intelligence that independently makes choices and carries out tasks with little human input. Supported by sophisticated models, these SAP AI agents can decide on a course of action and use various software tools to complete their objectives in SAP environments.

What is an LLM used for?

An LLM is used for natural language processing (NLP) and data analysis tasks. Through machine learning and deep learning, it is adept at translating text, categorizing content, generating written responses, answering questions conversationally, and recognizing data patterns.

Is an LLM really AI?

Yes. An LLM is a form of artificial intelligence designed to understand and produce text, among other capabilities. Developers train LLMs on massive volumes of data. These models rely on machine learning techniques, particularly a kind of neural network architecture known as the transformer.