Can a machine perceive its surroundings, interpret what it observes, and respond with purposeful action? With computer vision powered by artificial intelligence, modern computing, and imaging technologies, it can. Discover how computer vision systems work and how businesses apply them to spark innovation, encourage automation, boost efficiency, and increase value.

Core Highlights

- Computer vision applications analyze digital images to boost security systems, automate business processes, operate self-driving cars, and spark innovations in agriculture, healthcare, sports, and manufacturing.

- Current computer vision trends emphasize edge AI, real-time video analytics, model optimization, and specialized hardware accelerators that power affordable on-device deployment.

- Computer vision software speeds up decision-making, improves accuracy and safety, automates risky tasks, and delivers visual business insights, but it requires substantial technical resources and data governance.

What Is Computer Vision?

Computer vision is a branch of artificial intelligence that trains machines to emulate how humans perceive, interpret, and respond to visual information. By applying computer vision technology, organizations can unlock more efficient workflows, stronger operational performance, better customer interactions, and greater competitive advantages.

Computer vision frequently handles tasks that are slow, error-prone, or nearly impossible for people, such as spotting and classifying defects and irregularities, monitoring machinery conditions, reviewing medical images automatically, and detecting illnesses. These activities require organizations to monitor operations, processes, and other areas of the business, which generates massive volumes of image data. To benefit, they must extract insights from that data quickly and act on them decisively.

How Does Computer Vision Work?

Computer vision enables machines to study and interpret visual information in a way loosely modeled on how the human eye and brain work. Computer vision systems rely on cameras, sensors, and sophisticated algorithms trained on vast collections of images and other visual datasets.

This form of AI boosts productivity, fosters innovation, and supports automation in healthcare, security, manufacturing, retail, and self-driving technologies.

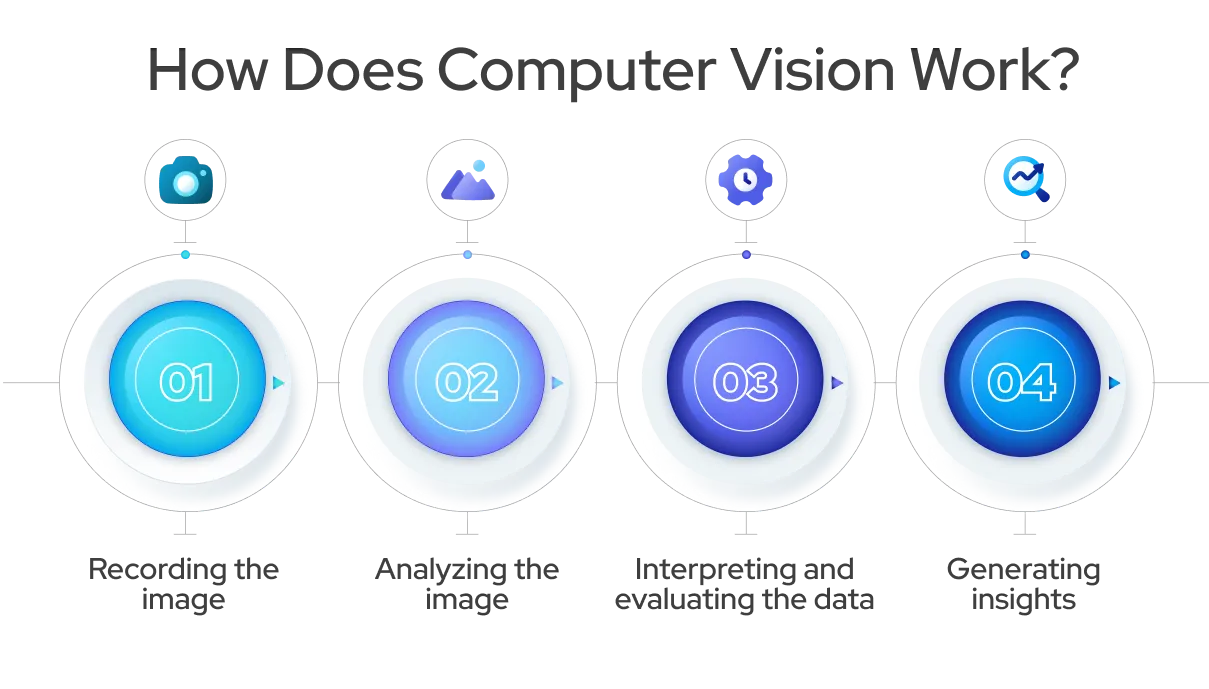

Image analysis involves four fundamental phases:

- Recording the image — Tools such as cameras, drones, and medical scanners capture a photo or video, supplying the raw visual data for AI systems to process and interpret.

- Analyzing the image — An AI system processes the captured visuals with computer vision algorithms to detect and recognize patterns. It compares the visual input with what it has learned from large collections of reference images, which may include objects, faces, or even medical scans.

- Interpreting and evaluating the data — After the system detects patterns, it decides what the image contains. The process may involve identifying machinery in a factory, recognizing individuals in surveillance footage, or detecting possible medical conditions in diagnostic scans.

- Generating insights — The system delivers conclusions drawn from its image evaluation, and these findings drive the actions it suggests. For instance, it may flag a defect on a production line, identify unauthorized entry in a facility, or track customer behavior in a retail space.
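To make the four phases concrete, here is a deliberately tiny Python sketch. It assumes a 4×4 grayscale frame stored as nested lists, a single hand-crafted feature (the fraction of dark pixels), and a hypothetical table of reference values standing in for what a trained model would learn. None of the names come from a real product or library.

```python
# Toy four-phase pipeline: capture -> analyze -> interpret -> generate insight.
# Frames are tiny 2D lists of grayscale values (0 = black, 255 = white).

def capture():
    """Phase 1 (stand-in): return a fixed 4x4 frame instead of reading a camera."""
    return [
        [200, 200, 200, 200],
        [200, 30, 30, 200],
        [200, 30, 30, 200],
        [200, 200, 200, 200],
    ]


def analyze(image, dark_threshold=100):
    """Phase 2: extract one simple feature, the fraction of dark pixels."""
    pixels = [p for row in image for p in row]
    dark = sum(1 for p in pixels if p < dark_threshold)
    return dark / len(pixels)


# Hypothetical reference values standing in for what a trained model learns.
REFERENCES = {"clean surface": 0.0, "surface defect": 0.25}


def interpret(feature):
    """Phase 3: choose the label whose reference value is closest to the feature."""
    return min(REFERENCES, key=lambda label: abs(REFERENCES[label] - feature))


def generate_insight(label):
    """Phase 4: turn the label into a suggested action."""
    if label == "surface defect":
        return "ALERT: flag item for inspection"
    return "OK: item passes"


frame = capture()
label = interpret(analyze(frame))
print(label, "->", generate_insight(label))
```

Keeping each phase in its own function means a real camera reader or a trained model could replace one piece without touching the others.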

What Is Computer Vision Used For?

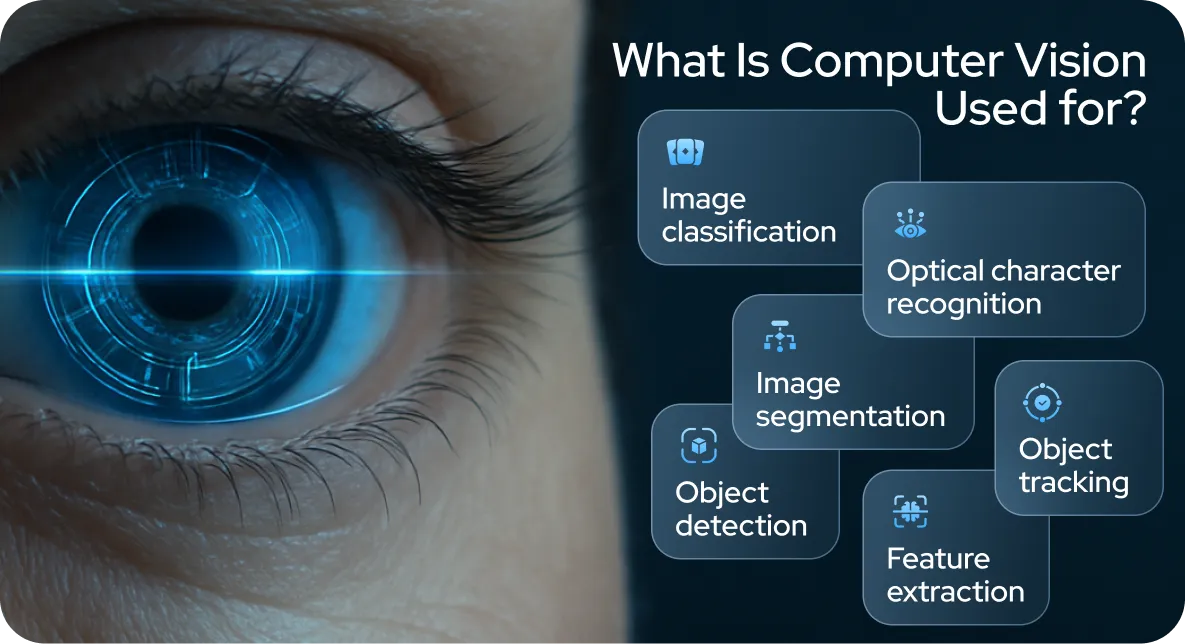

Computer vision uses machine learning and deep learning algorithms to train systems that identify elements in images and videos and generate predictions about them. Common tasks that computer vision models are trained to perform include:

- Image classification — Examining an image and assigning it a category label according to its contents. For instance, an image classification algorithm can identify whether a picture includes a bird, a cat, or even a disgruntled employee.

- Image segmentation — Labeling the pixels of an image so that objects are separated from their surroundings. For example, an image segmentation model can isolate a tumor from nearby lung tissue in X-ray scans.

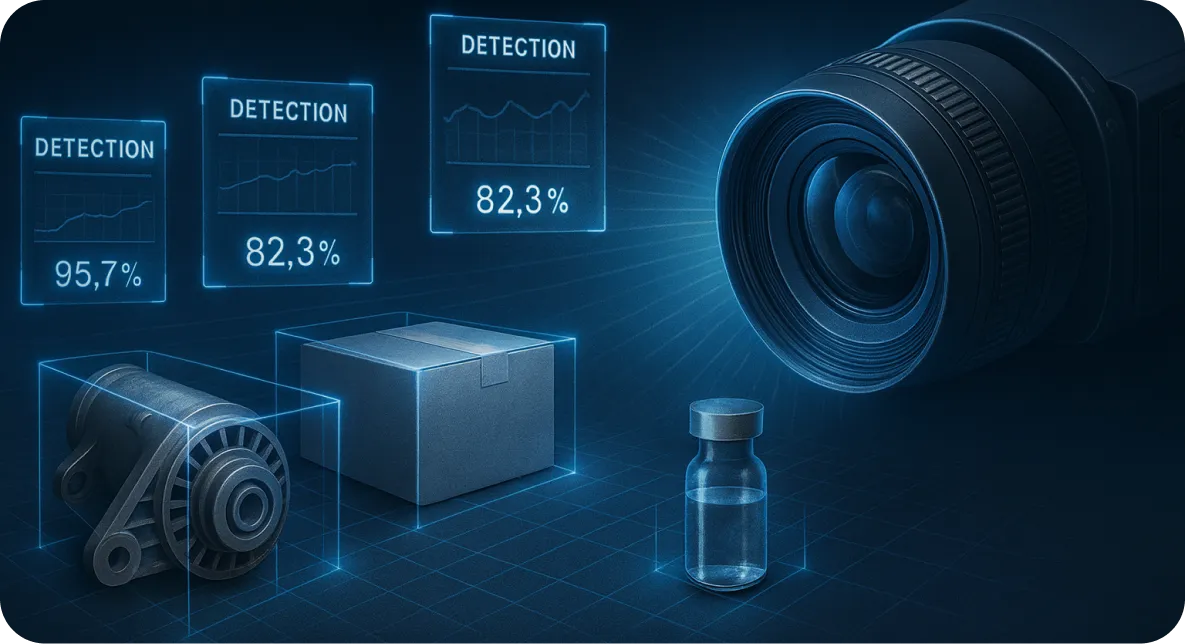

- Object detection — Scanning images and videos to locate target items, typically by drawing a bounding box around each one. These models often mark several objects at once, whether they are spotting products on store shelves for better inventory control or detecting defects on an assembly line.

- Object tracking — Following identified objects as they move through a scene. For instance, autonomous vehicles use object tracking to monitor pedestrians walking on sidewalks and crosswalks.

- Feature extraction — Isolating important traits within an image or video and passing them to another AI model for further analysis, such as image search and retrieval. For example, feature extraction can support automated traffic surveillance and collision detection on the road.

- Optical character recognition (OCR) — Extracting text from an image and converting it into a machine-readable format. Industries like insurance and healthcare often use this technology to process critical paperwork and patient medical records.
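Detection and tracking are often linked through intersection over union (IoU), the overlap between two bounding boxes divided by their combined area. The sketch below is one simple approach, not a production tracker. It assumes boxes as (x1, y1, x2, y2) pixel tuples and a hypothetical minimum IoU of 0.3, greedily carries each track ID forward to the detection in the next frame that overlaps it most, and gives unmatched detections new IDs.

```python
# Boxes are (x1, y1, x2, y2) pixel tuples with x2 > x1 and y2 > y1.

def iou(a, b):
    """Intersection over union of two axis-aligned boxes (0.0 to 1.0)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def match_tracks(tracks, detections, min_iou=0.3):
    """Greedily move each track ID {id: box} to its best-overlapping new detection.

    Tracks with no match are dropped; unmatched detections get new IDs.
    min_iou is a hypothetical tuning value.
    """
    updated, used = {}, set()
    for track_id, box in tracks.items():
        best, best_score = None, min_iou
        for i, det in enumerate(detections):
            score = iou(box, det)
            if i not in used and score >= best_score:
                best, best_score = i, score
        if best is not None:
            used.add(best)
            updated[track_id] = detections[best]
    next_id = max(tracks, default=0) + 1
    for i, det in enumerate(detections):
        if i not in used:
            updated[next_id] = det
            next_id += 1
    return updated


# Hypothetical detector output for two consecutive frames.
prev_tracks = {1: (10, 10, 50, 50), 2: (100, 100, 140, 140)}
new_detections = [(105, 102, 145, 142), (12, 11, 52, 51), (200, 200, 220, 220)]
print(match_tracks(prev_tracks, new_detections))
```

Production trackers often add motion prediction and occlusion handling; this greedy matching only illustrates the core idea of keeping identities stable across frames.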

Computer Vision Use Cases

Computer vision has evolved from a research concept into a practical tool that augments industries and daily life. By enabling machines to interpret and analyze visual data, it powers computer vision applications that range from safety monitoring to advanced healthcare diagnostics. Businesses, governments, and individuals benefit from its ability to detect risks, automate processes, and deliver insights in real time. The following sections highlight some of the most impactful use cases of computer vision.

Agriculture

Computer vision applications strengthen the agricultural industry by increasing output and lowering expenses through smart automation. Satellite imagery and drone footage allow farmers to study large areas of land and refine cultivation techniques. Computer vision tools automate essential duties such as tracking field conditions, detecting plant diseases, measuring soil moisture, and forecasting weather and harvest yields. Farmers also rely on computer vision for livestock supervision, making it a vital part of precision farming.
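Crop-health monitoring from drone or satellite imagery often relies on vegetation indices. One widely used index, which this article does not name, is the Normalized Difference Vegetation Index, NDVI = (NIR − Red) / (NIR + Red), computed per pixel from near-infrared and red reflectance. The sketch below assumes two tiny reflectance bands stored as nested lists and a hypothetical stress threshold of 0.3; real thresholds depend on the crop, sensor, and season.

```python
def ndvi(nir, red):
    """NDVI for one pixel; ranges from -1 to 1 (0.0 if both bands are zero)."""
    total = nir + red
    return (nir - red) / total if total else 0.0


def ndvi_map(nir_band, red_band):
    """Apply NDVI pixel by pixel to two equally sized bands."""
    return [[ndvi(n, r) for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir_band, red_band)]


def flag_stressed(index_map, threshold=0.3):
    """Return (row, col) of pixels below a hypothetical stress threshold."""
    return [(r, c) for r, row in enumerate(index_map)
            for c, value in enumerate(row) if value < threshold]


# Hypothetical 2x2 reflectance values from a multispectral drone image.
nir = [[0.50, 0.48], [0.45, 0.20]]
red = [[0.08, 0.10], [0.09, 0.18]]

index = ndvi_map(nir, red)
print([[round(v, 2) for v in row] for row in index])
print("possibly stressed pixels:", flag_stressed(index))
```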

Healthcare

The healthcare sector ranks among the top adopters of computer vision AI technology. In medical imaging, computer vision analyzes detailed visualizations of organs and tissues, helping doctors deliver faster, more precise diagnoses that can improve treatment success and life expectancy. Examples include:

- Automated interpretation of X-ray scans

- Diagnoses through MRI image analysis

- Tumor identification by examining skin growths and abnormal moles
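Segmentation, which assigns every pixel to a region, underpins tasks like isolating a suspicious area in a scan. Modern medical imaging systems typically use trained deep learning models for this; the sketch below swaps in classical intensity thresholding purely to show what a segmentation mask is. It assumes a tiny grayscale scan stored as nested lists and a hand-picked threshold, both hypothetical.

```python
# Classical threshold segmentation: each pixel becomes foreground (1) or
# background (0). This is an illustration, not a diagnostic method.

def segment(image, threshold):
    """Return a binary mask with the same shape as `image`."""
    return [[1 if p >= threshold else 0 for p in row] for row in image]


def region_area(mask):
    """Count foreground pixels in the mask."""
    return sum(sum(row) for row in mask)


# Hypothetical scan: a bright region on a darker background.
scan = [
    [10, 12, 11, 10, 9],
    [11, 80, 95, 12, 10],
    [10, 90, 99, 85, 11],
    [9, 11, 88, 10, 10],
]
mask = segment(scan, threshold=50)
for row in mask:
    print(row)
print("foreground pixels:", region_area(mask))
```

A measured area like this, tracked across scans over time, is one way segmentation output can feed the interpretation phase.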

Productivity

Computer vision analyzes images and extracts metadata for business insights, boosting revenue potential and streamlining operations. For instance, it can:

- Detect equipment maintenance needs and safety hazards

- Examine social media visuals to uncover customer behavior patterns and trends

- Spot quality flaws automatically before goods leave the plant

- Verify employees through automated facial recognition

Security

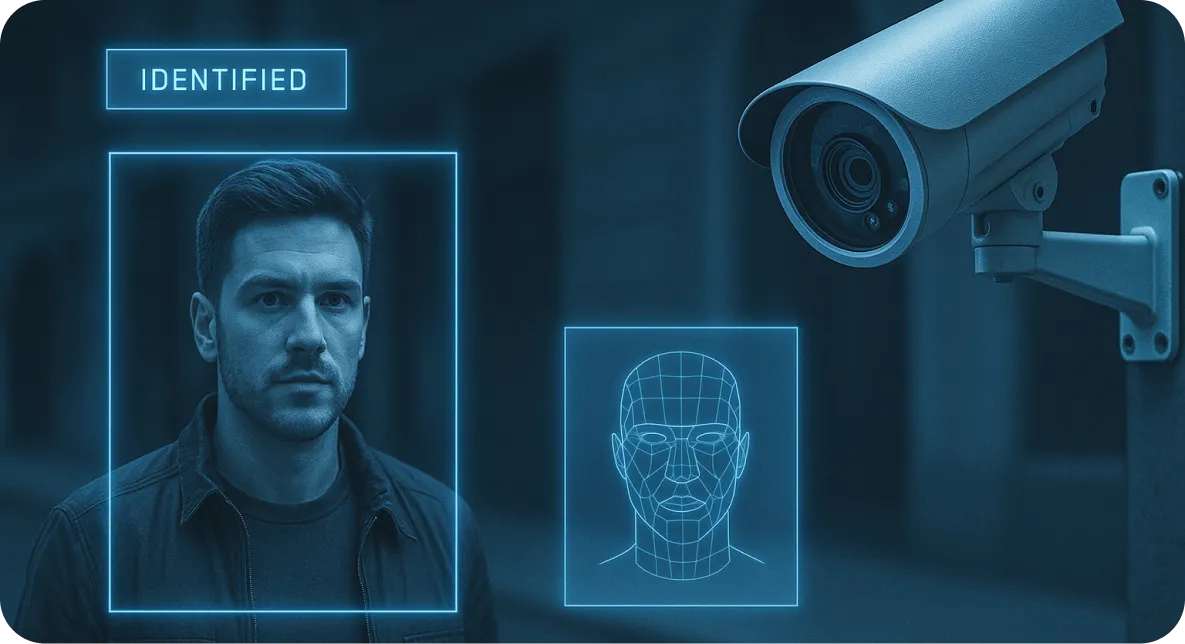

Governments and businesses apply computer vision to strengthen the protection of properties, store locations, and critical facilities. For instance, cameras and sensors oversee public areas, industrial zones, and highly secure sites. They trigger instant alerts when unusual activity occurs, such as an unauthorized person entering a restricted zone.

Computer vision also enhances personal safety at home and in public. For example, a home system can handle several safety concerns at once, such as monitoring pets through live feeds and using door cameras to detect visitors and package deliveries. In workplaces, similar monitoring verifies that employees wear proper personal protective equipment, triggers warnings when they do not, and produces compliance reports.
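The restricted-zone alert described in this section can be sketched in a few lines. This minimal version assumes an upstream object detector (not shown) already returns labeled bounding boxes, and treats the bottom center of a person's box as where they stand. The zone coordinates, labels, and function names are all hypothetical.

```python
# Hypothetical detector output: (label, (x1, y1, x2, y2)) in pixel coordinates.
RESTRICTED_ZONE = (300, 200, 500, 400)  # hypothetical (x1, y1, x2, y2) area of the frame


def foot_point(box):
    """Bottom center of a box, approximating where a person is standing."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, y2)


def in_zone(point, zone):
    """True if the point lies inside the rectangular zone (edges included)."""
    x, y = point
    return zone[0] <= x <= zone[2] and zone[1] <= y <= zone[3]


def intrusion_alerts(detections, zone=RESTRICTED_ZONE):
    """Return one alert message per person standing inside the zone."""
    return [f"ALERT: person at {foot_point(box)} in restricted zone"
            for label, box in detections
            if label == "person" and in_zone(foot_point(box), zone)]


detections = [
    ("person", (320, 250, 380, 390)),    # inside the zone
    ("person", (50, 60, 90, 180)),       # outside the zone
    ("forklift", (350, 220, 450, 380)),  # inside, but not a person
]
for alert in intrusion_alerts(detections):
    print(alert)
```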

Self-Driving Vehicles

Autonomous driving systems rely on computer vision to interpret live images from multiple onboard cameras and generate 3D maps of the surroundings. They process these visuals to detect other vehicles, traffic signs, pedestrians, and obstacles.

In partially autonomous cars, computer vision applies machine learning (ML) to track driver behavior. It detects indicators of distraction, tiredness, and drowsiness by analyzing the driver’s head posture, eye movements, and upper body actions. When the system identifies warning cues, it issues alerts to the driver and lowers the risk of accidents.
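One widely published technique for spotting drowsiness from eye movements is the eye aspect ratio (EAR), which compares the vertical and horizontal distances between six landmarks around an eye and drops toward zero as the eye closes. The sketch below assumes a separate facial-landmark detector (not shown) supplies those six points per frame, and uses a hypothetical threshold of 0.2 held for three consecutive frames. It illustrates the idea only and does not describe any particular vehicle system. It needs Python 3.8+ for `math.dist`.

```python
import math


def eye_aspect_ratio(eye):
    """EAR from six (x, y) eye landmarks p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid directly below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


class DrowsinessMonitor:
    """Signals an alert when EAR stays below a threshold for enough frames.

    Both defaults are hypothetical and would need tuning per camera and driver.
    """

    def __init__(self, ear_threshold=0.2, min_frames=3):
        self.ear_threshold = ear_threshold
        self.min_frames = min_frames
        self.closed_frames = 0

    def update(self, ear):
        """Feed one frame's EAR; return True while an alert should be raised."""
        if ear < self.ear_threshold:
            self.closed_frames += 1
        else:
            self.closed_frames = 0
        return self.closed_frames >= self.min_frames


# Hypothetical landmarks for an open and a nearly closed eye.
open_eye = [(0, 0), (2, 1), (4, 1), (6, 0), (4, -1), (2, -1)]
closed_eye = [(0, 0), (2, 0.2), (4, 0.2), (6, 0), (4, -0.2), (2, -0.2)]

monitor = DrowsinessMonitor()
frames = [open_eye, closed_eye, closed_eye, closed_eye, open_eye]
for eye in frames:
    if monitor.update(eye_aspect_ratio(eye)):
        print("ALERT: driver may be drowsy")
```

Requiring several consecutive low-EAR frames keeps ordinary blinks from triggering the alert.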

Sports

Computer vision systems support sports performance and analytics. Coaches and athletes apply computer vision to monitor player motion, evaluate game tactics, and deliver real-time feedback that sharpens performance.
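As an example of turning tracked motion into feedback, the sketch below estimates a player's speed from successive positions produced by a tracker. It assumes one (x, y) pixel position per frame, a known frame rate, and a single hypothetical meters-per-pixel scale, which only holds for a calibrated, roughly overhead camera; angled broadcast footage would need proper camera calibration.

```python
import math


def speeds_mps(positions, fps, meters_per_pixel):
    """Speed in meters per second between each pair of consecutive positions.

    positions: (x, y) pixel coordinates, one per frame.
    """
    return [math.dist(a, b) * meters_per_pixel * fps
            for a, b in zip(positions, positions[1:])]


track = [(100, 200), (103, 204), (106, 208), (110, 211)]  # hypothetical tracker output
speeds = speeds_mps(track, fps=25, meters_per_pixel=0.05)  # hypothetical camera setup
print("speeds (m/s):", [round(s, 2) for s in speeds])
print("average (m/s):", round(sum(speeds) / len(speeds), 2))
```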

In sporting goods manufacturing, computer vision automates production and inspection. It enforces quality control by scanning products on production lines, spotting flaws, and confirming proper packaging. It also tracks equipment performance for predictive maintenance.

Contemporary Trends in Computer Vision

Emerging trends combine edge computing with on-device machine learning, an approach often referred to as edge AI. Shifting AI processing from the cloud to local devices allows computer vision models to operate almost anywhere and supports the development of scalable applications.

The cost of computer vision continues to fall, driven by gains in processing efficiency, declining hardware prices, and emerging innovations. As a result, many computer vision applications have become practical and cost-effective, which speeds up adoption.

The most significant computer vision developments today include:

- AI Model Optimization and Deployment

- Edge Computer Vision

- Hardware AI Accelerators

- Real-Time Video Analytics

1. AI Model Optimization and Deployment

After a decade of deep learning research focused on boosting accuracy and performance, computer vision has now entered a phase of large-scale deployment. Advances in AI model optimization and new, more efficient architectures have significantly reduced the size of machine learning models while increasing computational efficiency. These improvements let deep learning computer vision systems run without relying on costly, power-hungry AI hardware and GPUs in centralized data centers.
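Quantization is one widely used optimization technique of this kind, although the article does not single out specific methods. The sketch below assumes symmetric, per-tensor int8 quantization of a hypothetical list of weights: each 32-bit float becomes an 8-bit integer plus one shared scale factor, cutting storage by roughly 4x at the cost of a small rounding error. Real toolchains such as TensorFlow Lite or PyTorch also handle calibration and per-channel scales, which this toy omits.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization; returns (int values, scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]  # hypothetical layer weights
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
max_error = max(abs(w - a) for w, a in zip(weights, approx))

print("int8 values:", q)
print(f"scale={scale:.4f}, max error={max_error:.4f}")
print("float32 bytes:", 4 * len(weights), "-> int8 bytes:", len(q))
```

Rounding keeps each error within half a quantization step, which is why small weights such as 0.003 collapse to zero while larger ones survive almost unchanged.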

2. Edge Computer Vision

In the past, organizations relied on pure cloud solutions for computer vision and AI because of their virtually unlimited computing resources and the ability to scale quickly. Web and cloud-based computer vision systems require sending all images and video to the cloud, either directly or through a computer vision API such as Amazon Rekognition, Google Cloud Vision API, Microsoft Azure Cognitive Services, or the Clarifai API.

In mission-critical scenarios, organizations often cannot send data through a centralized cloud framework because of technical issues (latency, bandwidth, connectivity, and redundancy), privacy concerns (confidential data, legal requirements, and security), or excessive costs (real-time processing, large-scale operations, and high-resolution video loads that create expensive bottlenecks). As a result, companies and institutions adopt edge computing strategies that bypass these cloud limitations by extending cloud capabilities across multiple interconnected edge devices.

Edge AI, also known as edge intelligence or on-device machine learning, uses edge computing and the Internet of Things (IoT) to move ML tasks from the cloud to local devices close to the data source, such as cameras. Because the volume of data created at the edge is massive and still growing rapidly, AI must process and interpret that information in real time while preserving the confidentiality and security of visual data.
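A back-of-the-envelope calculation shows why moving analysis to the edge saves bandwidth. The sketch below compares an uncompressed 1080p, 30 fps, 8-bit RGB stream with sending only detection results, assuming a hypothetical 200-byte message per frame. Real cameras compress video (for example with H.264), so actual savings are much smaller than this raw-frame comparison suggests, but the direction of the trade-off is the same.

```python
def raw_video_bitrate(width, height, fps, bytes_per_pixel=3):
    """Uncompressed video rate in bits per second (8-bit RGB by default)."""
    return width * height * bytes_per_pixel * fps * 8


def metadata_bitrate(messages_per_second, bytes_per_message):
    """Bits per second needed to send only detection results upstream."""
    return messages_per_second * bytes_per_message * 8


raw = raw_video_bitrate(1920, 1080, 30)
meta = metadata_bitrate(messages_per_second=30, bytes_per_message=200)  # hypothetical size

print(f"raw 1080p30 stream: {raw / 1e9:.2f} Gbit/s")
print(f"detections only:    {meta / 1e3:.1f} kbit/s")
print(f"reduction: about {raw // meta:,}x")
```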

3. Hardware AI Accelerators

The tech world is witnessing rapid growth in high-performance deep learning chips that deliver greater energy efficiency and run on compact devices and edge systems. Popular deep learning AI hardware today includes edge computing units such as embedded computers and system-on-chip (SoC) platforms like the Nvidia Jetson TX2, Intel NUC, and Google Coral.

Dedicated neural network accelerators attach to embedded computing systems. The most common hardware AI accelerators for neural networks include the Intel Myriad X VPU, the Google Coral Edge TPU, and Nvidia NVDLA.

4. Real-Time Video Analytics

Traditional machine vision systems often rely on specialized cameras and tightly controlled conditions. In contrast, modern deep learning models provide greater resilience, easier retraining and reuse, and enable the creation of new, unique applications in almost any industry.

Modern deep-learning computer vision techniques process video feeds from ordinary, low-cost security cameras or webcams to deliver advanced AI-powered video analytics.
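One lightweight technique that can sit in front of a heavier model on ordinary cameras is frame differencing: it flags frames that changed noticeably, so the deep learning model only runs when something happens. This is a classical technique rather than deep learning. The sketch assumes grayscale frames stored as nested lists plus two hypothetical thresholds: the per-pixel change that counts as motion and the fraction of changed pixels that triggers analysis.

```python
def motion_score(prev, curr, pixel_threshold=25):
    """Fraction of pixels whose grayscale value changed by more than pixel_threshold."""
    changed = total = 0
    for prev_row, curr_row in zip(prev, curr):
        for p, c in zip(prev_row, curr_row):
            total += 1
            if abs(c - p) > pixel_threshold:
                changed += 1
    return changed / total


def detect_motion(frames, min_fraction=0.1):
    """Return indices of frames that differ enough from the previous frame."""
    return [i for i in range(1, len(frames))
            if motion_score(frames[i - 1], frames[i]) > min_fraction]


# Hypothetical 3x3 frames: sensor noise in f1, an object appears in f2.
f0 = [[50, 50, 50], [50, 50, 50], [50, 50, 50]]
f1 = [[52, 50, 51], [50, 49, 50], [50, 50, 53]]
f2 = [[52, 50, 51], [200, 200, 200], [50, 50, 53]]
f3 = [row[:] for row in f2]

print("frames with motion:", detect_motion([f0, f1, f2, f3]))
```

At 30 fps, each frame leaves roughly 33 ms for processing, so cheap filters like this help keep expensive models within a real-time budget.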

Benefits of Computer Vision

Breakthroughs in deep learning have boosted the precision and efficiency of computer vision systems. Supporting tools, such as open-source frameworks and cloud service platforms, have also lowered costs and broadened access. As a result, developers and organizations of every size now build computer vision solutions.

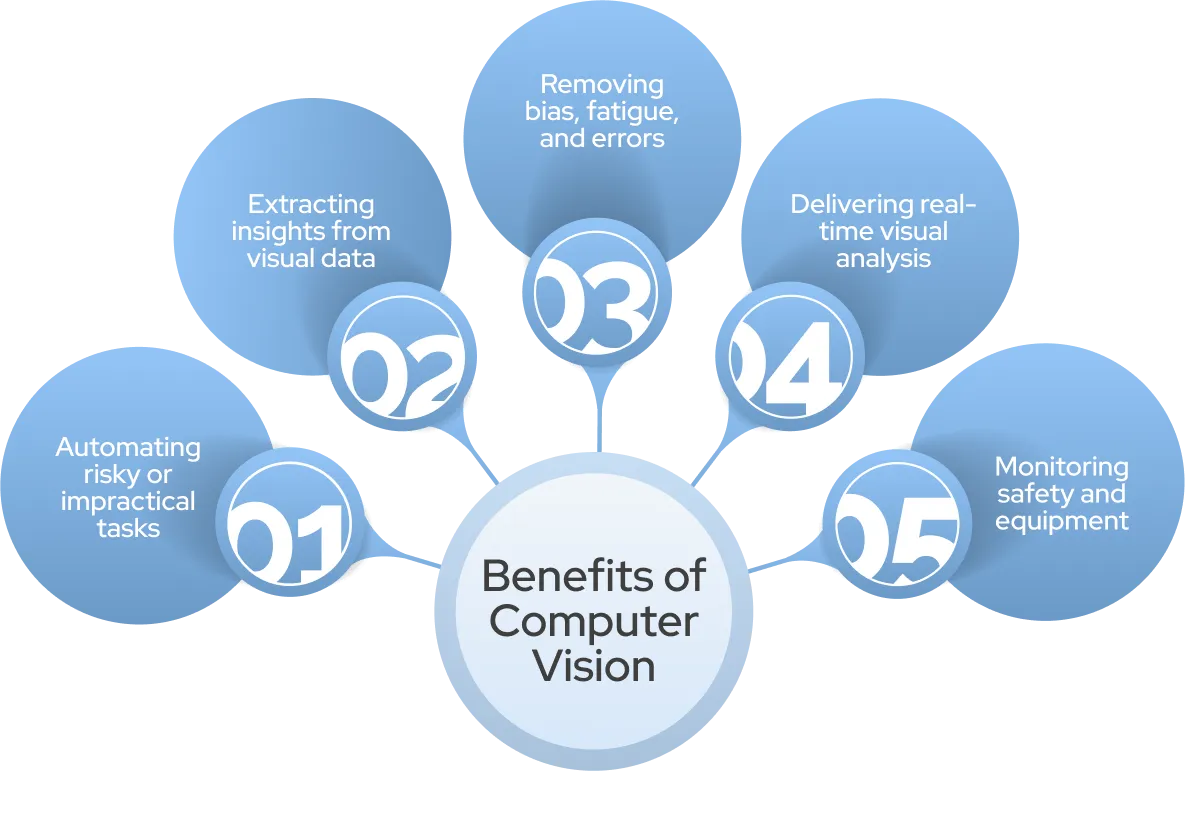

Computer vision powers systems that tackle practical challenges in everyday life, like:

- Automating and expanding processes that prove dangerous or unrealistic for people to perform

- Deriving business intelligence from visual analytics to guide choices and strategic planning

- Reducing human bias, fatigue, and mistakes by handling repetitive tasks with consistent accuracy

- Enabling real-time solutions by analyzing visual information far faster than human vision

- Overseeing environments and machinery to maintain safety and prevent mishaps

Challenges of Computer Vision

Although cloud computing and open-source technologies have expanded access to computer vision, building your own computer vision applications remains difficult. The technology is complex and demands significant funding and resources. Despite its measurable advantages, deploying computer vision exposes key technical obstacles, such as visual data accuracy and variety, dimensional complexity, and inconsistent data labeling and classification. It also raises ethical concerns and puts a spotlight on organizational preparedness.

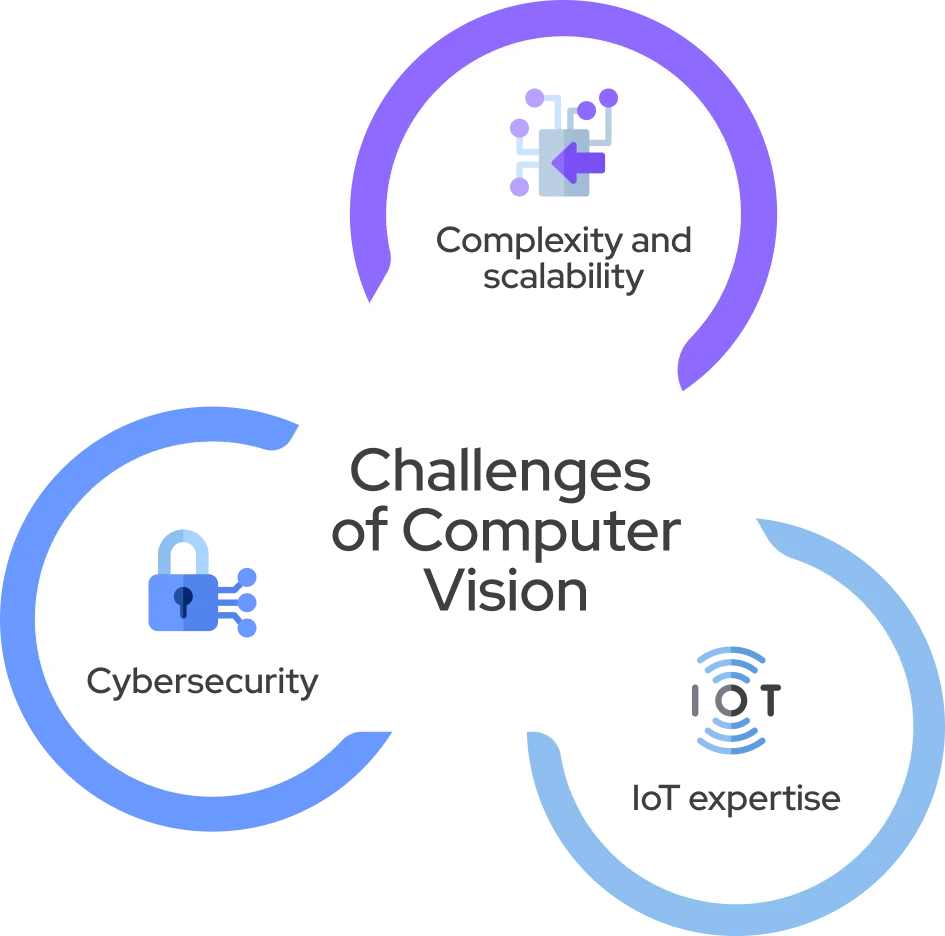

Many companies must overcome several obstacles before they can build strong, reliable computer vision systems:

- Complexity and scalability — Running machine learning operations (MLOps) demands AI and ML expertise that many companies lack in-house.

- Cybersecurity — Handling massive volumes of data requires enforcing strict practices in confidentiality, protection, and regulatory compliance.

- IoT expertise — Computer vision initiatives demand Internet of Things (IoT) solutions and integrations, which are best managed by an experienced IoT provider.

Book a Free Demo of ViSRUPT | AiFA Labs’ Edge Vision AI Platform

Fortunately, companies do not have to tackle a computer vision deployment alone. AiFA Labs draws upon years of experience and specialized expertise in every economic sector. Organizations from all industries rely on AiFA Labs for artificial intelligence services, cybersecurity guidance, and IoT solutions to turn their computer vision goals into reality. We help businesses master computer vision while managing risks, reducing losses, and boosting operational efficiency. Book a free online demonstration of our innovative Edge Vision AI Platform, ViSRUPT, or call AiFA Labs at (469) 864-6370 today!

FAQ

Computer vision is a technology that enables machines to automatically identify what images contain and describe it with precision and speed. Today, computer systems can access massive amounts of pictures and video streams collected from or generated by smartphones, traffic cameras, surveillance networks, and many other devices.

Python and C++ are the most widely used programming languages for computer vision. If you are starting out, Python is ideal thanks to its simplicity and powerful libraries.

OpenCV is a widely used open-source library built for computer vision projects. It serves as an excellent entry point for newcomers because it lets developers quickly carry out computer vision tasks such as image filtering, transformation, and basic feature detection. With OpenCV, you can begin by practicing essential image processing methods such as resizing, cropping, and edge detection, which lay the groundwork for more advanced applications.
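To show what those three operations do under the hood, here is a dependency-free sketch in plain Python on a tiny grayscale image stored as nested lists. In OpenCV itself you would typically crop with NumPy slicing (`img[y:y + h, x:x + w]`), resize with `cv2.resize`, and find edges with `cv2.Sobel` or `cv2.Canny`; the helper names below are hypothetical and are not OpenCV's API.

```python
def crop(img, x, y, w, h):
    """Return the w x h region whose top-left corner is (x, y)."""
    return [row[x:x + w] for row in img[y:y + h]]


def resize_nearest(img, new_w, new_h):
    """Nearest-neighbor resize: each output pixel copies the closest source pixel."""
    old_h, old_w = len(img), len(img[0])
    return [[img[r * old_h // new_h][c * old_w // new_w] for c in range(new_w)]
            for r in range(new_h)]


def sobel_magnitude(img):
    """Approximate edge strength |Gx| + |Gy| with 3x3 Sobel kernels.

    Border pixels are left at 0 for simplicity.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = sum(kx[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            gy = sum(ky[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            out[r][c] = abs(gx) + abs(gy)
    return out


# A 4x4 image: dark left half, bright right half, so there is one vertical edge.
img = [[0, 0, 100, 100] for _ in range(4)]
print("crop:", crop(img, 1, 1, 2, 2))
print("resize:", resize_nearest(img, 2, 2))
for row in sobel_magnitude(img):
    print(row)
```

The strong responses appear only in the columns next to the dark-to-bright boundary, which is exactly the kind of output edge detectors such as Canny refine further.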